You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

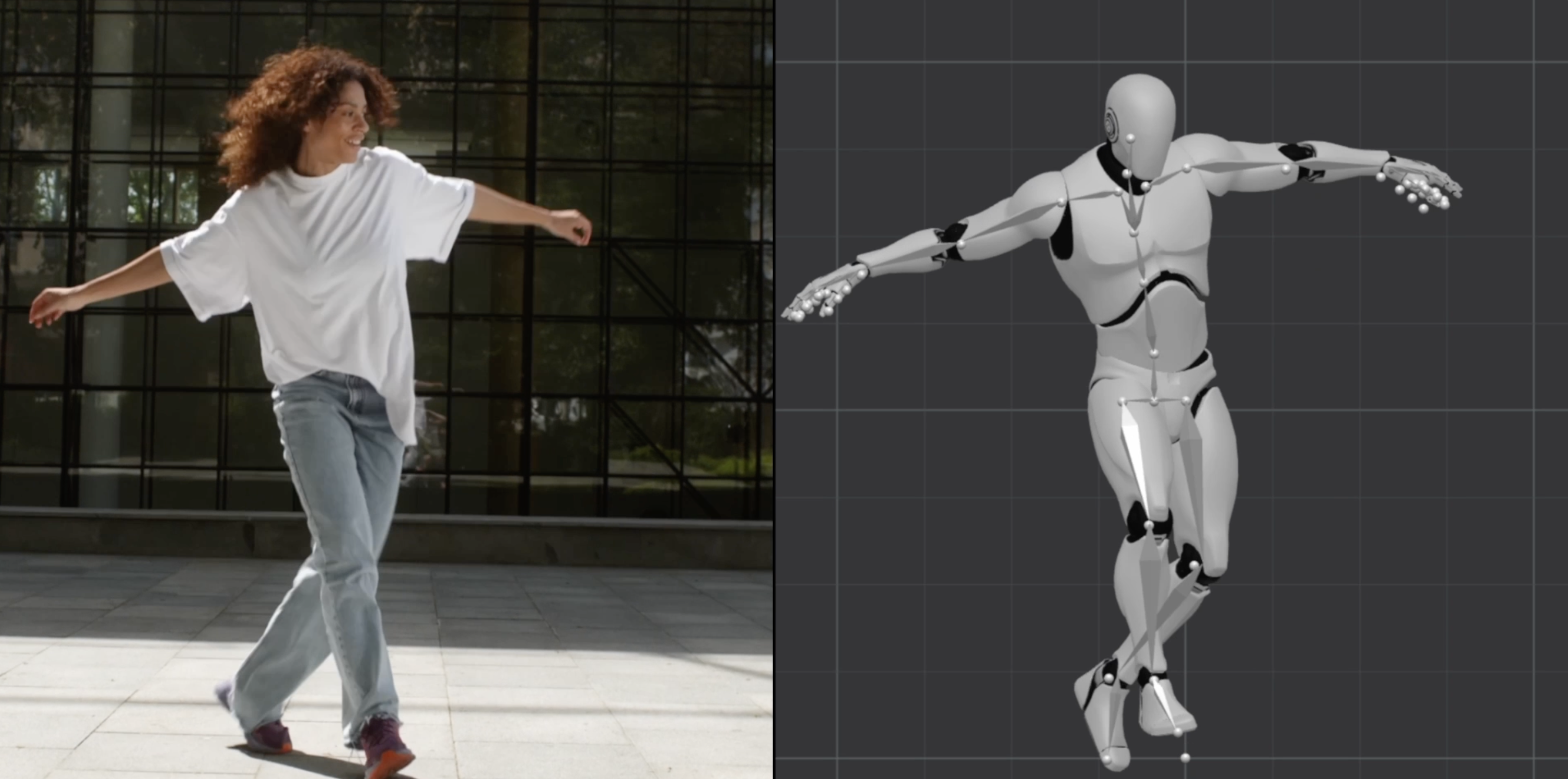

I found AI / machine learning to convert video to mocap

- Thread starter vabber


Looks cool, but their pricing and tier limits are ridiculous, making this thing unusable for anything: https://www.deepmotion.com/pricing

ajtrader23

Active member

Imagine stuff like Kitty MoCap, but coming from any traditional 2D video you like. Where are we on this tech?

yasparukko

Active member

We don't really need this; it's very easy to create mocap animations in VAM using any VR headset, the Oculus Quest 2 for example.

Hint: you don't have to record the whole body at once. Record one hand, rewind, record the other hand, rewind, record hips, head, and so on. Very easy and fun!


We don't really need this; it's very easy to create mocap animations in VAM using any VR headset, the Oculus Quest 2 for example.

Hint: you don't have to record the whole body at once. Record one hand, rewind, record the other hand, rewind, record hips, head, and so on. Very easy and fun!

Whoa, that's news to me, bigly!!!! Any direction you can point me in for more info on how to do this? I've got a Quest 2 and had no idea it was capable of mocap.

ajtrader23

Active member


Maybe I wasn't clear: I'm not interested in spending time creating brand-new mocaps. I would love to take existing 2D videos that I already enjoy and transpose those actions into Virt-a-Mate.

Some kind of scanner / importer. Something well beyond my development skills!

yasparukko

Active member

Yes, you can use the built-in VAM animation recording or Timeline recording. They work more or less the same way, but Timeline is probably better because you can have multiple animations at the same time.

You select the controllers you want to animate, start recording and move the selected controllers the way you want, then repeat with another controller.
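To picture why recording one controller at a time works, here is a toy model in Python. It is not VaM's or Timeline's actual data format or API; the `LayeredRecording` class and controller names like `lHandControl` are made up for illustration. Each pass stores keyframes only for the controllers selected in that pass, so tracks from earlier passes survive.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Toy model only: NOT VaM's or Timeline's real format or API.
Position = Tuple[float, float, float]
Keyframe = Tuple[float, Position]  # (time in seconds, controller position)


@dataclass
class LayeredRecording:
    """One keyframe track per controller, built up over several recording passes."""
    tracks: Dict[str, List[Keyframe]] = field(default_factory=dict)

    def record_pass(self, selected: Dict[str, List[Keyframe]]) -> None:
        # Only the controllers selected for this pass are (re)written;
        # tracks recorded in earlier passes for other controllers are kept.
        for controller, keys in selected.items():
            self.tracks[controller] = sorted(keys)

    def pose_at(self, t: float) -> Dict[str, Position]:
        # Hold each controller at its latest keyframe at or before t
        # (no interpolation, to keep the sketch short).
        pose: Dict[str, Position] = {}
        for controller, keys in self.tracks.items():
            earlier = [pos for time, pos in keys if time <= t]
            if earlier:
                pose[controller] = earlier[-1]
        return pose
```

Recording the left hand in one pass and the right hand in the next leaves both tracks in place, and re-recording a controller replaces only that controller's track, which is the "rewind and record the next part" idea from the hint above.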


Advanced and intuitive keyframe animations for Virt-A-Mate - acidbubbles/vam-timeline

Plask Motion: AI-powered Mocap

AI motion capture from video, transforming your videos into stunning animations.

plask.ai

This one is intriguing and much saner on pricing.

Looked into video-to-mocap solutions and all the tools seem to be online/browser-only. Disappointing!

Unusable with slow upload speeds, expensive and with ridiculous limitations. Give me an offline solution.

I doubt that performance is the reason for that. It's just a matter of time until this changes; it was the same for AI video upscaling.


Jocks3D

Active member

I just posted about this subject in another thread, where I'm having trouble with the .bvh files that both Deep Motion and Plask.ai generate. Neither plays with BVH Player in VaM, although other .bvh files I downloaded elsewhere play fine.
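If you want to check whether the joint names are what differs, one quick test is to compare the HIERARCHY section of a file that won't play with one that does. A minimal Python sketch, assuming plain-text BVH files; the function names are my own and are not part of BVH Player or any plugin:

```python
import re
from typing import List


def bvh_joint_names(text: str) -> List[str]:
    """Return the ROOT/JOINT names declared in a BVH HIERARCHY section, in file order."""
    names = []
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("MOTION"):
            break  # joint declarations only appear before the MOTION section
        match = re.match(r"(ROOT|JOINT)\s+(\S+)", stripped)
        if match:
            names.append(match.group(2))
    return names


def missing_joints(candidate: str, reference: str) -> List[str]:
    """Joint names found in the reference file but absent from the candidate file."""
    present = set(bvh_joint_names(candidate))
    return [name for name in bvh_joint_names(reference) if name not in present]
```

Calling `missing_joints(export_text, known_good_text)` with the text of a Plask or Deep Motion export and of a file that already plays lists the names the export lacks. A long list points to a naming mismatch, though this only compares names, not rest poses or bone axes.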

But I can say that Deep Motion could be a very useful tool for making poses if we can get it to work. You get 30 free seconds/credits per month. I would not use it for mocap from a 2D video to a 3D figure; I think Plask.ai is better for that. But Deep Motion lets you import a 2D photo and turn it into a 3D figure, and this works pretty well. It only costs one credit/second per photo, so you can do 30 poses a month for free. A good source for full-body model poses is www.fineart.sk.

Plask.ai is what I've been playing with for 2D video to 3D figure mocap. You only get a limited amount of free use per month; I think it's around 30 seconds. But if you do this a lot, it might be worth it. A good source I found for 2D full-body videos with a solid-color background (white, black or green) is stock footage sites like Shutterstock. You can download their preview videos and import them into Plask; the watermark on the file doesn't seem to affect the final animation. The final 3D mocap appears to be good quality, although, as I said, I have not been able to check this with a VaM model using the BVH Player plugin. YouTube is also a good source of videos to convert to mocap, such as dance videos.

I also did not know you could create mocaps with the Quest 2, and I'm still not clear on the process. Are you supposed to possess a model and move them around as you would in real life, recording it? In that case, what are you doing, making fucking motions into the air... or with another person?

I wish someone could make a tutorial video on how to use the Quest 2 for mocap in VaM.


ajtrader23

Active member


Thanks for this. Have you tried bringing a 2D porn video into 3D?

As for Quest 2 mocap: you're just recording the movements of the various anchors, so you can do it by hand, by possessing, or whatever. It's all manual.

There is the FreeMoCap project, but it's really designed for capturing your own pre-prepared videos. It runs with multiple cameras and uses giant QR code boards to align and calibrate the camera positions.

freemocap.org


There are also realtime mocap solutions that work with the MS Kinect. I have a setup that works well. I am looking to expand to a multi-Kinect setup, but sadly that means buying another software licence.

Home | The FreeMoCap Project

The Free Motion Capture Project (FreeMoCap) aims to provide research-grade markerless motion capture software to everyone for free.


Jocks3D

Active member

Have you tried bringing in a 2D porn video into 3D?

Videos with one person, full body in the shot, on a solid-color background like green or white seem to work best with these online mocap programs. I'm not aware of any solo porn videos on a solid-color background, but if you know of one, in theory it should work. Try it out and let us know!

yasparukko

Active member

I think all of these AI mocap solutions are only good for very basic motions at the moment, and the resulting BVH file will most likely not use Genesis 2 skeleton/bone names, so you would have to retarget it to the Genesis 2 body for it to work with VAM. It's just not worth the hassle.
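For the renaming half of that job: a BVH file is plain text, so the joint names in its HIERARCHY section can be swapped using a lookup table. A minimal Python sketch; the mapping is hypothetical (the left-hand names stand in for whatever a given export actually uses), so check both sides against your own files. Renaming alone is not full retargeting: differences in rest pose, bone orientation and hierarchy still need a tool like Daz.

```python
import re
from typing import Dict

# Hypothetical example mapping: export-side names (left) to Genesis 2-style
# bone names (right). Verify both sides against your own .bvh files.
EXAMPLE_MAP: Dict[str, str] = {
    "Hips": "hip",
    "Spine": "abdomen",
    "Neck": "neck",
    "Head": "head",
}

# Captures the indentation plus keyword, then the joint name itself.
_JOINT_DECL = re.compile(r"^(\s*(?:ROOT|JOINT)\s+)(\S+)")


def rename_bvh_joints(text: str, mapping: Dict[str, str]) -> str:
    """Rename ROOT/JOINT declarations; OFFSETs, CHANNELS and MOTION data are left untouched."""
    lines = text.split("\n")
    for i, line in enumerate(lines):
        if line.strip().startswith("MOTION"):
            break  # joint names are only declared in the HIERARCHY section
        match = _JOINT_DECL.match(line)
        if match and match.group(2) in mapping:
            lines[i] = match.group(1) + mapping[match.group(2)] + line[match.end():]
    return "\n".join(lines)
```

`rename_bvh_joints(text, EXAMPLE_MAP)` returns the edited file text. The MOTION numbers stay valid because the joint and channel order does not change; joints missing from the mapping keep their original names.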

Jocks3D

Active member

the resulting BVH file will most likely not be using Genesis 2 skeleton/bone names, so you would have to retarget it to Genesis 2 body for it to work with VAM

That must be the reason I can't get them to work with BVH Player in VaM. That's a shame, because I think they would be useful. I tried one with a 30-second clip of a hip-hop dancer and it came out very well. I just can't use it in VaM.

Jocks3D

Active member

For anyone interested, I found that the solution is to export a Daz3D Genesis 2 figure into Deep Motion and use it for the video-to-mocap animation, then export the result from Deep Motion as a BVH and bring it back into Daz, then export a BVH one more time that can be played in VaM. There is a good video about this on YouTube; search for "Deep Motion to Daz3D".

ajtrader23

Active member

Has anyone successfully created a VAM mocap generated from a traditional 2D video? That would be the holy grail of this. Imagine revisiting your favorite scenes in VR.

Jocks3D

Active member

Has anyone successfully created a VAM mocap generated from a 2D traditional video?

Yes, I've made a couple of dance videos using Deep Motion and the technique described above. But there are many "rules" you need to follow, such as: the person in the 2D video must be in full frame, head to toe, facing the camera, with no camera movement.

I assume you're talking about converting 2D video sex scenes to mocap, and that is not possible at this time (that I know of).

anistart888

New member

Maybe this is a dumb idea, but has anyone here tried using Kinect tracking for mocap? I wonder if it could pick up movement from another screen...

That must be the reason I can't get them to work with BVH Player in VaM. That's a shame, because I think they would be useful. I tried one with a 30-second clip of a hip-hop dancer and it came out very well. I just can't use it in VaM.

I tried this too. No way would VAM play the BVH file.

My rule of thumb: if you have to mess around converting files and ticking boxes in Unity or DAZ, then mess around some more with code and tick boxes, it's best to wait until someone makes a pipeline plugin that does it all for you. I find it an intensely frustrating and irritating waste of my time; however, some folks enjoy doing this kind of stuff.

Jocks3D

Active member

My rule of thumb: if you have to mess around converting files and ticking boxes in Unity or DAZ, then mess around some more with code and tick boxes, it's best to wait until someone makes a pipeline plugin that does it all for you.

I felt the same way. I have been trying several methods, and the steps you need to go through are quite time-consuming. So far Deep Motion seems to take the least amount of time, so it's my first choice at the moment.

There are some people online who use the Kinect method, but I have not tried it yet. It seems like I might get a similar result just using VaM's built-in possess mocap feature. I heard Sony is coming out with a cheap mocap solution in 2023, so that's something to keep an eye on.

I felt the same way. I have been trying several methods, and the steps you need to go through are quite time-consuming. So far Deep Motion seems to take the least amount of time, so it's my first choice at the moment.

There are some people online who use the Kinect method, but I have not tried it yet. It seems like I might get a similar result just using VaM's built-in possess mocap feature. I heard Sony is coming out with a cheap mocap solution in 2023, so that's something to keep an eye on.

Maybe we are too lazy to learn to use Acid Bubbles' excellent Timeline properly. I'm sure if we did, we'd get similar results without any of that brain-painful filling out of forty checkboxes before you export from one app/game engine to another. My god, I once exported a model from Daz into Unity for lipsync, then set up the lipsync for it! What a performance! A whole week of watching 14 videos, plus an exchange of how-to PMs with the lipsync developer, etc. Adamant's VAM lipsync does exactly the same with none of the stupid faff!

I'd love to make clothing using Marvelous Designer, good female clothes for futa models, but I simply can't be bothered to import from MD to Daz, then to Blender, then to VAM. It's not worth the payoff.

ajtrader23

Active member


Are you suggesting... manually editing a Timeline animation in sync with a video? Or are you saying that Timeline somehow has the ability to capture motion from a 2D source?

Are you suggesting... manually editing a Timeline animation in sync with a video? Or are you saying that Timeline somehow has the ability to capture motion from a 2D source?

Er, nope, none of the above. Pretty sure of that.

You can watch Acid Bubbles' videos on how to use it if you're curious enough. They are quite easy to follow. I'm just too lazy to make manual animations/expressions in the TL.

I happily pay this (brilliant) VAM developer $5.00 a month to make similar stuff for me and all his other patrons, rather than spending a whole month using the TL to do it myself. I DON'T like coding and similar faffing about. He's so modest he refuses to describe himself as a 'developer'. Quiet genius at work.
