Hi, I'm DarkChris, a Blender animator and programmer with a lot of experience converting MMD animations using Python scripts.

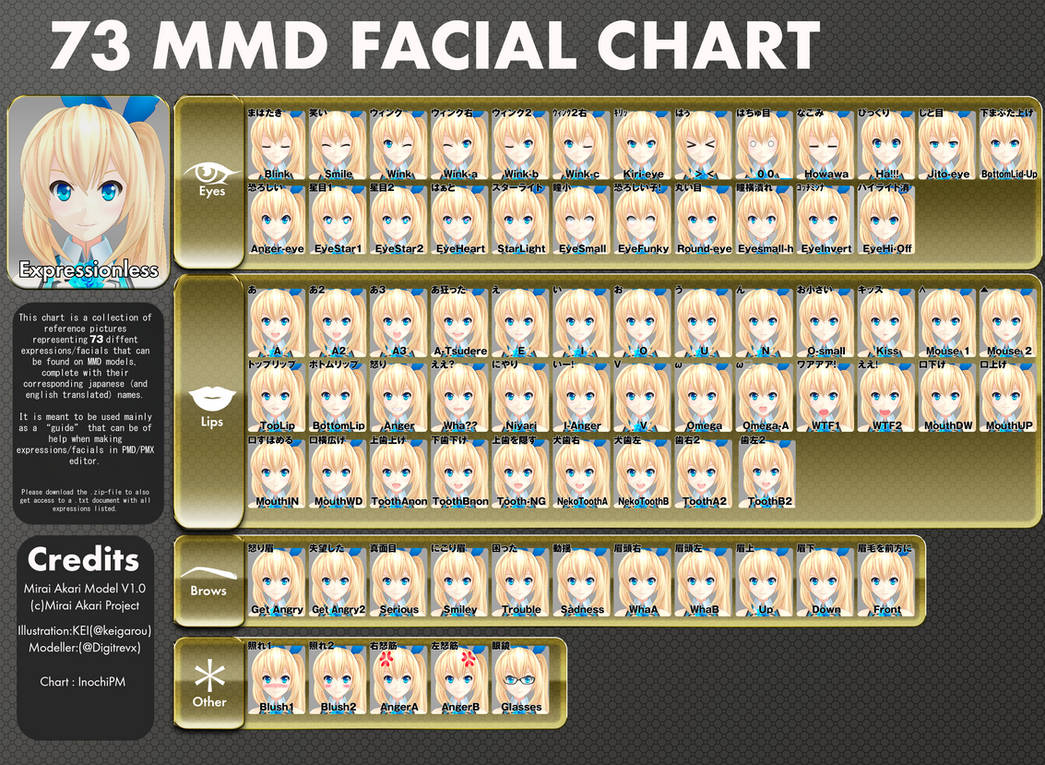

While I'm very impressed with VAM's abilities, the existing MMD import options don't support facial expressions, and that simply won't do for me. A few years ago, CraftyMoment wrote a decent Python script for converting MMD motion files to VAM:

GitHub - CraftyMoment/mmd_vam_import: Utility for importing MMD motion files (*.vmd) to VAM

Using his approach as a starting point, I've already corrected a few joint rotations and tweaked the physics. (I've posted two of my test conversions in Free Scenes to demonstrate the progress.)

MMD Test: Lewd Movements - Scenes

This is a collection of lewd animations from an MMD conversion. There are several sections that can be used for projects. You should be able to use whatever look you want, but be careful of using collision or changing the joint physics. MMD...

Gokuraku Jodo MMD Test - Scenes

This is a test conversion of one of my favorite MMD's for alignment testing: Gokuraku Jodo. It has very precise arm and feet movement, so it can be useful in visual identification of how well an MMD conversion process is going. It's also an...

And now I've found the import scripts we used for Blender to get facial expressions:

pymeshio/pymeshio/vmd/reader.py at master · ousttrue/pymeshio

3D model reader/writer for Python.
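For anyone curious where the facial expression data lives, here is a rough sketch of pulling the morph keyframes out of a .vmd file with nothing but the standard library. This is not pymeshio's API and not CraftyMoment's code. It assumes the commonly documented "Vocaloid Motion Data 0002" layout (30-byte header, 20-byte model name, 111-byte bone keyframes, then 23-byte morph keyframes with Shift-JIS names), and `read_morph_frames` is just a name I made up for illustration.

```python
import io
import struct

# Field sizes below are assumptions based on the commonly documented
# "Vocaloid Motion Data 0002" layout; older files may differ.
HEADER_SIZE = 30       # magic string, NUL-padded
MODEL_NAME_SIZE = 20   # model name, Shift-JIS, NUL-padded
BONE_FRAME_SIZE = 111  # 15 name + 4 frame + 12 position + 16 rotation + 64 interpolation
MORPH_FRAME = struct.Struct("<15sIf")  # morph name, frame index, weight (23 bytes)


def _decode_name(raw):
    """Decode a NUL-padded Shift-JIS name field."""
    return raw.split(b"\x00", 1)[0].decode("shift_jis", errors="replace")


def read_morph_frames(stream):
    """Return (morph_name, frame_index, weight) tuples from a binary VMD stream.

    Hypothetical helper for illustration only.
    """
    header = stream.read(HEADER_SIZE)
    if not header.startswith(b"Vocaloid Motion Data 0002"):
        raise ValueError("not a VMD 0002 file")
    stream.read(MODEL_NAME_SIZE)  # model name is not needed here

    # Bone keyframes come first; skip over them to reach the morph section.
    (bone_count,) = struct.unpack("<I", stream.read(4))
    stream.seek(bone_count * BONE_FRAME_SIZE, io.SEEK_CUR)

    (morph_count,) = struct.unpack("<I", stream.read(4))
    frames = []
    for _ in range(morph_count):
        name, frame, weight = MORPH_FRAME.unpack(stream.read(MORPH_FRAME.size))
        frames.append((_decode_name(name), frame, weight))

    # Files do not guarantee keyframe order, so sort by frame, then name.
    return sorted(frames, key=lambda item: (item[1], item[0]))
```

To try it, open a motion file in binary mode and pass the handle in, e.g. `read_morph_frames(open("motion.vmd", "rb"))`. Mapping the MMD morph names onto VAM morphs is a separate problem that this sketch does not touch.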

Progress updates and more demos to be added shortly.