
Benchmark Result Discussion

- Thread starter MacGruber


Hi guys, could you please help me set up my AMD 7950X3D platform to work best with VaM on Windows 10 and an ASUS 4090?

I've set the XMP profile for the RAM (2x 32 GB G.Skill Neo), but how do I get VaM to use the cores with the 3D cache? Is there anything else in the BIOS (I have a Gigabyte Aorus Master) that I should set?

7500F, can't beat the 5600X and 3600X (with a 6800 XT) TAT

Desktop:

Automatic GPU OC via MSI Afterburner

View attachment 154495

Oculus Quest 2 via Air Link

Preparations:

I manually set the headset refresh rate to 120 Hz (to avoid the VSync problem MacGruber mentioned).

I also manually set a fixed render resolution. Otherwise it would change between benchmarks and cause problems with the modded rendering API (see "Using OpenXR + vrperfkit" below).

View attachment 154483

Baseline:

It looks like a GPU bottleneck for me.

View attachment 154482

I tried automatic GPU and CPU overclocking with MSI Afterburner and AMD Ryzen Master.

The GPU overclock helped, but not enough to justify another benchmark.

The CPU overclock actually gave me a worse result. I think it's because it set a higher-than-default but constant clock on all cores, while (as I understand it) VaM is mostly single-threaded, and the automatic turbo boost manages clocks better across threads, giving a much bigger clock bump to the cores VaM actually uses.

To further optimize performance, I followed this video:

I had some problems with vrperfkit; more about that below.

Using the OpenXR adapter with the per-game installation option:

This adapter bypasses the OpenVR API, so games can run without SteamVR, which gives a substantial performance boost in most games when using the Oculus runtime.

I had some problems running the game. Changing the content of `VaM (OpenVR).bat` to `START "VaM" VaM.exe -vrmode OpenXR` seems to fix the problem, but I don't know whether it actually does anything.

I placed the API DLL file in `VaM_Data\Plugins`. I recommend keeping a copy of the original file.
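If you swap DLLs often, the "keep a copy of the original" step can be scripted. A minimal sketch in Python: `swap_plugin_dll` is a hypothetical helper, and which DLL the OpenXR adapter replaces is an assumption you must check against the adapter's own instructions (for OpenComposite's per-game install it is typically `openvr_api.dll`).

```python
import shutil
from pathlib import Path


def swap_plugin_dll(vam_root: Path, new_dll: Path, dll_name: str) -> Path:
    """Back up VaM_Data/Plugins/<dll_name>, then replace it with new_dll.

    Hypothetical helper; dll_name is an assumption you must verify.
    Returns the path of the backup copy.
    """
    plugins = vam_root / "VaM_Data" / "Plugins"
    target = plugins / dll_name
    backup = target.with_name(target.name + ".orig")
    # Back up only once, so re-running never overwrites the original copy.
    if target.exists() and not backup.exists():
        shutil.copy2(target, backup)
    shutil.copy2(new_dll, target)
    return backup
```

To undo the swap, copy the `.orig` file back over the DLL.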

It gave me an additional FPS boost:

- about +15 FPS on average from baseline

- in most scenes it was more like +35 FPS from baseline

View attachment 154484

Using OpenXR + vrperfkit:

I used FFR (Fixed foveated rendering) and upscaling (in this case FSR).

I found that you need to set a fixed rendering resolution in your headset settings, as well as a proper renderScale in the upscaling config. When renderScale was set to the wrong fraction, I couldn't run the game: the engine was unable to allocate the rendering buffer.
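The vrperfkit config comments (quoted in the configuration further down) explain that `renderScale` is applied to both width and height, unlike SteamVR's render scale, which counts pixels. A small sketch of that arithmetic; the helper names are hypothetical, and rounding to the nearest pixel is an assumption, not necessarily what vrperfkit does internally:

```python
import math


def render_resolution(width: int, height: int, render_scale: float) -> tuple[int, int]:
    """Per-axis scaling: both width and height are multiplied by render_scale."""
    # Rounding to whole pixels is an assumption for illustration.
    return round(width * render_scale), round(height * render_scale)


def steamvr_equivalent(render_scale: float) -> float:
    """SteamVR's render scale counts pixels, so it is the square of the per-axis factor."""
    return render_scale ** 2


def vrperfkit_equivalent(steamvr_scale: float) -> float:
    """Inverse conversion: per-axis factor for a given SteamVR (pixel-count) scale."""
    return math.sqrt(steamvr_scale)
```

For example, `render_resolution(2000, 2000, 0.5)` gives `(1000, 1000)`, and a SteamVR scale of 0.5 corresponds to a per-axis factor of about 0.707, matching the note in the config. The `renderScale: 0.7` used here therefore renders roughly half the pixels.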

I used Release 3 and unpacked the files into the same folder as VaM.exe (the same location as VaM_Updater.exe).
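A quick way to confirm you unpacked into the right folder is to check that the VaM executables and the vrperfkit files sit side by side. A sketch in Python; `missing_vrperfkit_files` is hypothetical, and the assumption that the release consists of `dxgi.dll` and `vrperfkit.yml` should be checked against the archive you actually downloaded:

```python
from pathlib import Path

# Files from the post that mark the VaM root folder.
VAM_MARKERS = ("VaM.exe", "VaM_Updater.exe")
# Assumed contents of the vrperfkit release; adjust to what you unpacked.
VRPERFKIT_FILES = ("dxgi.dll", "vrperfkit.yml")


def missing_vrperfkit_files(folder: Path) -> list[str]:
    """Return expected files missing from folder; an empty list means it looks right."""
    expected = VAM_MARKERS + VRPERFKIT_FILES
    return [name for name in expected if not (folder / name).is_file()]
```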

It gave me an additional FPS boost:

- about +35 FPS on average from baseline

- in most scenes it was more like +60 FPS from baseline

There is one drawback: with my current upscaling settings, most of the text is unreadable. The gameplay is fine, though. I'm pretty sure it can be better with proper calibration of renderScale and sharpness.

View attachment 154485

My vrperfkit.yml configuration:

```yaml
# Upscaling: render the game at a lower resolution (thus saving performance),
# then upscale the image to the target resolution to regain some of the lost
# visual fidelity.
upscaling:
  # enable (true) or disable (false) upscaling
  enabled: true
  # method to use for upscaling. Available options (all of them work on all GPUs):
  # - fsr (AMD FidelityFX Super Resolution)
  # - nis (NVIDIA Image Scaling)
  # - cas (AMD FidelityFX Contrast Adaptive Sharpening)
  method: fsr
  # control how much the render resolution is lowered. The renderScale factor is
  # applied to both width and height. So if renderScale is set to 0.5 and you
  # have a resolution of 2000x2000 configured in SteamVR, the resulting render
  # resolution is 1000x1000.
  # NOTE: this is different from how render scale works in SteamVR! A SteamVR
  # render scale of 0.5 would be equivalent to renderScale 0.707 in this mod!
  renderScale: 0.7
  # configure how much the image is sharpened during upscaling.
  # This parameter works differently for each of the upscaling methods, so you
  # will have to tweak it after you have chosen your preferred upscaling method.
  sharpness: 0
  # Performance optimization: only apply the (more expensive) upscaling method
  # to an inner area of the rendered image and use cheaper bilinear sampling on
  # the rest of the image. The radius parameter determines how large the area
  # with the more expensive upscaling is. Upscaling happens within a circle
  # centered at the projection centre of the eyes. You can use debugMode (below)
  # to visualize the size of the circle.
  # Note: to disable this optimization entirely, choose an arbitrary high value
  # (e.g. 100) for the radius.
  radius: 0.75
  # when enabled, applies a MIP bias to texture sampling in the game. This will
  # make the game treat texture lookups as if it were rendering at the higher
  # target resolution, which can improve image quality a little bit. However,
  # it can also cause render artifacts in rare circumstances. So if you experience
  # issues, you may want to turn this off.
  applyMipBias: true

# Fixed foveated rendering: continue rendering the center of the image at full
# resolution, but drop the resolution when going to the edges of the image.
# There are four rings whose radii you can configure below. The inner ring/circle
# is the area that's rendered at full resolution and reaches from the center to innerRadius.
# The second ring reaches from innerRadius to midRadius and is rendered at half resolution.
# The third ring reaches from midRadius to outerRadius and is rendered at 1/4th resolution.
# The final fourth ring reaches from outerRadius to the edges of the image and is rendered
# at 1/16th resolution.
# Fixed foveated rendering is achieved with Variable Rate Shading. This technique is only
# available on NVIDIA RTX and GTX 16xx cards.
fixedFoveated:
  # enable (true) or disable (false) fixed foveated rendering
  enabled: true
  # configure the end of the inner circle, which is the area that will be rendered at full resolution
  innerRadius: 0.4
  # configure the end of the second ring, which will be rendered at half resolution
  midRadius: 0.55
  # configure the end of the third ring, which will be rendered at 1/4th resolution
  outerRadius: 1.0
  # the remainder of the image will be rendered at 1/16th resolution
  # when reducing resolution, prefer to keep horizontal (true) or vertical (false) resolution?
  favorHorizontal: true
  # when applying fixed foveated rendering, vrperfkit will do its best to guess when the game
  # is rendering which eye to apply a proper foveation mask.
  # However, for some games the default guess may be wrong. In such instances, you can uncomment
  # and use the following option to change the order of rendering.
  # Use letters L (left), R (right) or S (skip) to mark the order in which the game renders to the
  # left or right eye, or skip a render target entirely.
  #overrideSingleEyeOrder: LRLRLR

# Enabling debugMode will visualize the radius to which upscaling is applied (see above).
# It will also output additional log messages and regularly report how much GPU frame time
# the post-processing costs.
debugMode: true

# Hotkeys allow you to modify certain settings of the mod on the fly, which is useful
# for direct comparisons inside the headset. Note that any changes you make via hotkeys
# are not currently persisted in the config file and will reset to the values in the
# config file when you next launch the game.
hotkeys:
  # enable or disable hotkeys; if they cause conflicts with ingame hotkeys, you can either
  # configure them to different keys or just turn them off
  enabled: true
  # toggles debugMode
  toggleDebugMode: ["ctrl", "f1"]
  # cycle through the available upscaling methods
  cycleUpscalingMethod: ["ctrl", "f2"]
  # increase the upscaling circle's radius (see above) by 0.05
  increaseUpscalingRadius: ["ctrl", "f3"]
  # decrease the upscaling circle's radius (see above) by 0.05
  decreaseUpscalingRadius: ["ctrl", "f4"]
  # increase the upscaling sharpness (see above) by 0.05
  increaseUpscalingSharpness: ["ctrl", "f5"]
  # decrease the upscaling sharpness (see above) by 0.05
  decreaseUpscalingSharpness: ["ctrl", "f6"]
  # toggle the application of MIP bias (see above)
  toggleUpscalingApplyMipBias: ["ctrl", "f7"]
  # take a screen grab of the final (post-processed, upscaled) image.
  # The screen grab is stored as a dds file next to the DLL.
  captureOutput: ["ctrl", "f8"]
  # toggle fixed foveated rendering
  toggleFixedFoveated: ["alt", "f1"]
  # toggle if you want to prefer horizontal or vertical resolution
  toggleFFRFavorHorizontal: ["alt", "f2"]
```
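The `fixedFoveated` comments in this config describe four rings. As a rough illustration, here is a sketch that maps a normalized distance from the projection centre to the fraction of resolution each ring is rendered at, using the radii from this config. The function is hypothetical and only mirrors the comments; vrperfkit itself does this on the GPU with Variable Rate Shading, and treating each boundary as belonging to the inner ring is an assumption.

```python
def ffr_resolution_fraction(r: float, inner: float = 0.4, mid: float = 0.55,
                            outer: float = 1.0) -> float:
    """Fraction of full resolution used at normalized radius r (per the config comments)."""
    if r < 0:
        raise ValueError("radius must be non-negative")
    if r <= inner:
        return 1.0       # inner circle: full resolution
    if r <= mid:
        return 1 / 2     # second ring: half resolution
    if r <= outer:
        return 1 / 4     # third ring: 1/4th resolution
    return 1 / 16        # everything beyond outerRadius: 1/16th resolution
```

With `innerRadius: 0.4`, only a fairly small central area keeps full detail, which may be part of why text further out gets hard to read.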

Well there is not much difference between 2080 Ti and 3090 Ti :C

I think I'm CPU limited. As far as I know, VaM uses only one or two cores for physics.

I ran the VR test without vrperfkit because it caused a lot of visual artifacts. I also used the bat file method to run VaM directly from the Virtual Desktop streamer.


View attachment 284833

View attachment 284834


Try turning off SMT (hyperthreading) and see what that gives you. With that processor you should be getting a bit more than that. By the way, baseline 3 is the one to look at for that; it's the one that shows the CPU bottleneck.

I tried different CPU configurations; disabling SMT made things worse.

I had some success by leaving only 2 cores enabled with PBO. Auto overclocking and manual overclocking gave worse results.

View attachment 285224

I tried different core configurations and managed to overclock them to over 5 GHz:

View attachment 285225

View attachment 285226

In the end, the best result came from turning on the Game Mode profile with PBO plus a slight GPU overclock.

View attachment 285228

View attachment 285234

I landed on these results:

View attachment 285230

It seems that I can't push it any further; maybe someone can give me some tips.

In VR, things look like this:

View attachment 285232

That does not seem playable, but please note the resolution: everything is so crisp. Also, by enabling frame generation in Virtual Desktop, I perceive buttery-smooth 120 FPS most of the time.


Damn, how'd you get that physics score? What are your current mobo + RAM settings?

Tweaked the RAM.

View attachment 242329


The 7800X3D makes it easy. Some tightened RAM timings, and PBO on with Curve Optimizer at -40 on mine. But looking at yours, V-Sync is on somewhere in your settings: your FPS is locked, hovering around 120, which bottlenecks all your timings.
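The V-Sync point can be checked with frame-time arithmetic: with V-Sync on, frames are not presented faster than the display refreshes, so the measured FPS cannot exceed the refresh rate (120 Hz in the setups above). A rough sketch in Python; the helper names are hypothetical, and the model ignores V-Sync's extra quantization to whole refresh intervals and variable-refresh displays:

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds spent on each frame at a given frame rate."""
    return 1000.0 / fps


def vsync_capped_fps(uncapped_fps: float, refresh_hz: float) -> float:
    """Simplified model: V-Sync never lets the frame rate exceed the refresh rate."""
    return min(uncapped_fps, refresh_hz)
```

At 120 Hz every frame takes at least about 8.33 ms, so a system able to render 200 FPS (5 ms per frame) would still report 120, and the benchmark can no longer tell the two apart.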


I encountered a problem and could not use the benchmark. When it reached a certain scene, the menu (opened with the F1 key) suddenly popped up, and the benchmark ended immediately. Does anyone know why?

Probably an error caused by a defective .var file or plugin. It's best to set up a fresh VaM installation folder to run the benchmark. My normal VaM installation now also freezes in the middle of the benchmark, but the benchmark still runs fine on my unused, fresh extra install of VaM.


New RAM (2 sticks instead of 4) and better CPU cooling. CPU overclocked with PBO and Curve Optimizer set to -30 on almost all cores.

I tried overclocking the RAM (beyond XMP) and adjusting timings, but got worse results:

GPU sits at around 75% utilization, so I'm still CPU limited :C
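The reasoning here (GPU well below full utilization, so the limit is elsewhere) can be written down as a rule of thumb. A sketch only: the 95% threshold is an assumption, not a figure from this thread or from VaM, and a frame cap such as V-Sync can also keep GPU utilization low.

```python
def likely_bottleneck(gpu_util_percent: float, threshold: float = 95.0) -> str:
    """Rule of thumb: a GPU near full load is the limit; otherwise suspect the CPU or a frame cap."""
    if not 0.0 <= gpu_util_percent <= 100.0:
        raise ValueError("utilization must be between 0 and 100")
    # Threshold is an assumed value; tune it for your own monitoring tool.
    return "GPU" if gpu_util_percent >= threshold else "CPU or frame cap"
```

With the ~75% reading above, this returns "CPU or frame cap", consistent with the post's conclusion.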

Guys, I'm about to upgrade my CPU (it hasn't arrived yet). Is there anywhere I could look for RAM overclocking info? With my current CPU I can't run my RAM at its rated frequency; I have to run it lower for the system to boot. I hope the new one lets me reach the rated speed or even go higher. My current CPU is a Ryzen 7 1700 (non-X), and I'm getting a Ryzen 7 5800X3D soon. My RAM is Corsair LPX 3000 MHz (CMK16GX4M2B3000C15), running at 2733; I can't get it any higher with my current setup. I'm pretty new to these system/BIOS tweaks.

The Zen 1 processors had poor memory support. The difference between 3000 and 2733 will be too small to notice in frames or physics compared to the boost from the 5800X3D. I upgraded from 16 GB at 2400 to 32 GB at 3200: my physics score improved by 0.5, but things load faster since the system is now using more than 20 GB.
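To put 3000 vs 2733 in numbers: peak theoretical DDR4 bandwidth is the transfer rate (MT/s) times 8 bytes per 64-bit channel times the number of channels. A worked sketch assuming dual-channel operation (the CMK16GX4M2B3000C15 kit is two sticks); real-world gains are smaller than the peak difference, and latency matters as well:

```python
def ddr_peak_bandwidth_gbs(mt_per_s: float, channels: int = 2, bus_bytes: int = 8) -> float:
    """Peak theoretical bandwidth in GB/s: transfers/s x bytes per transfer x channels."""
    return mt_per_s * bus_bytes * channels / 1000.0


rated = ddr_peak_bandwidth_gbs(3000)    # 48.0 GB/s
current = ddr_peak_bandwidth_gbs(2733)  # 43.728 GB/s
gain = (rated / current - 1) * 100      # about 9.8 %
print(f"{rated:.1f} GB/s vs {current:.1f} GB/s: +{gain:.1f}% peak bandwidth")
```

That prints `48.0 GB/s vs 43.7 GB/s: +9.8% peak bandwidth`, i.e. under 10% even on paper.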

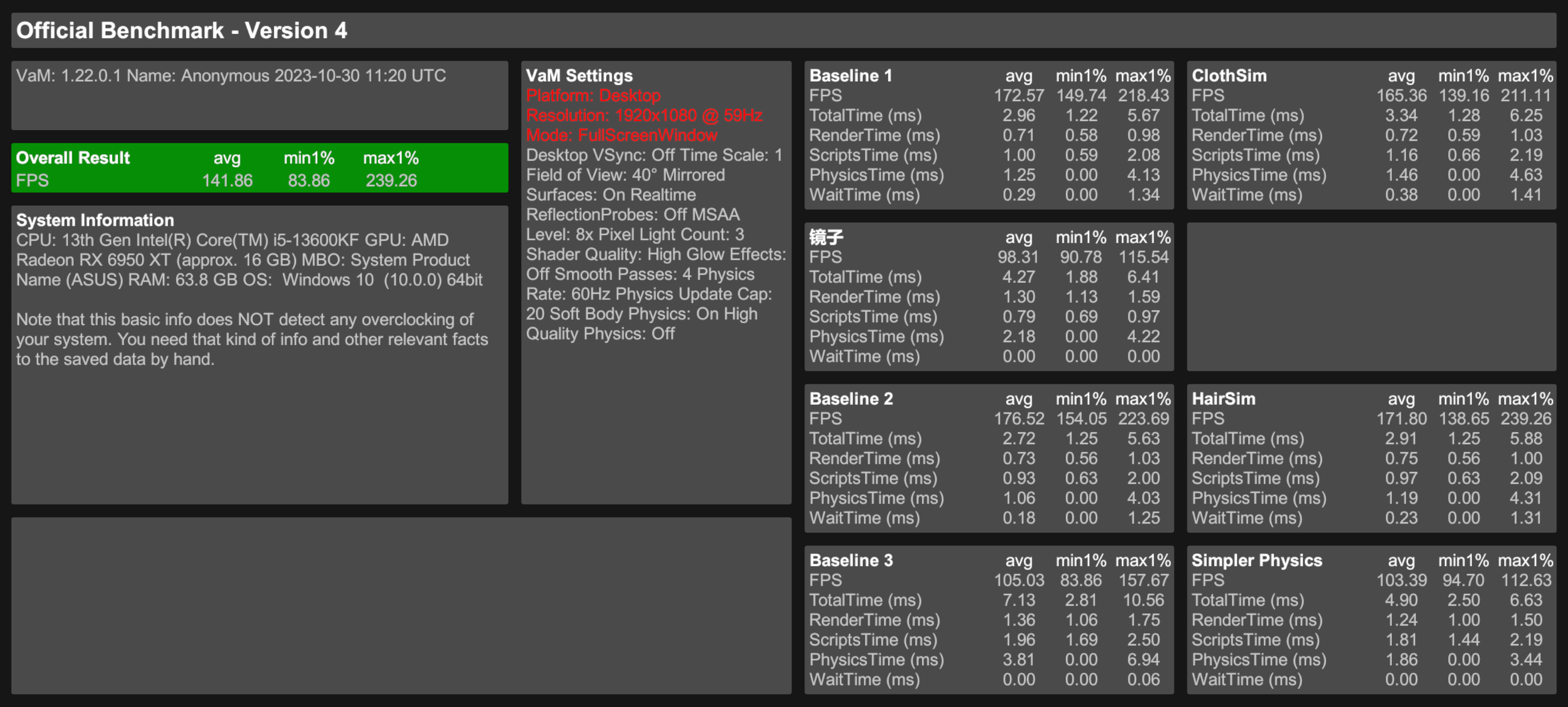

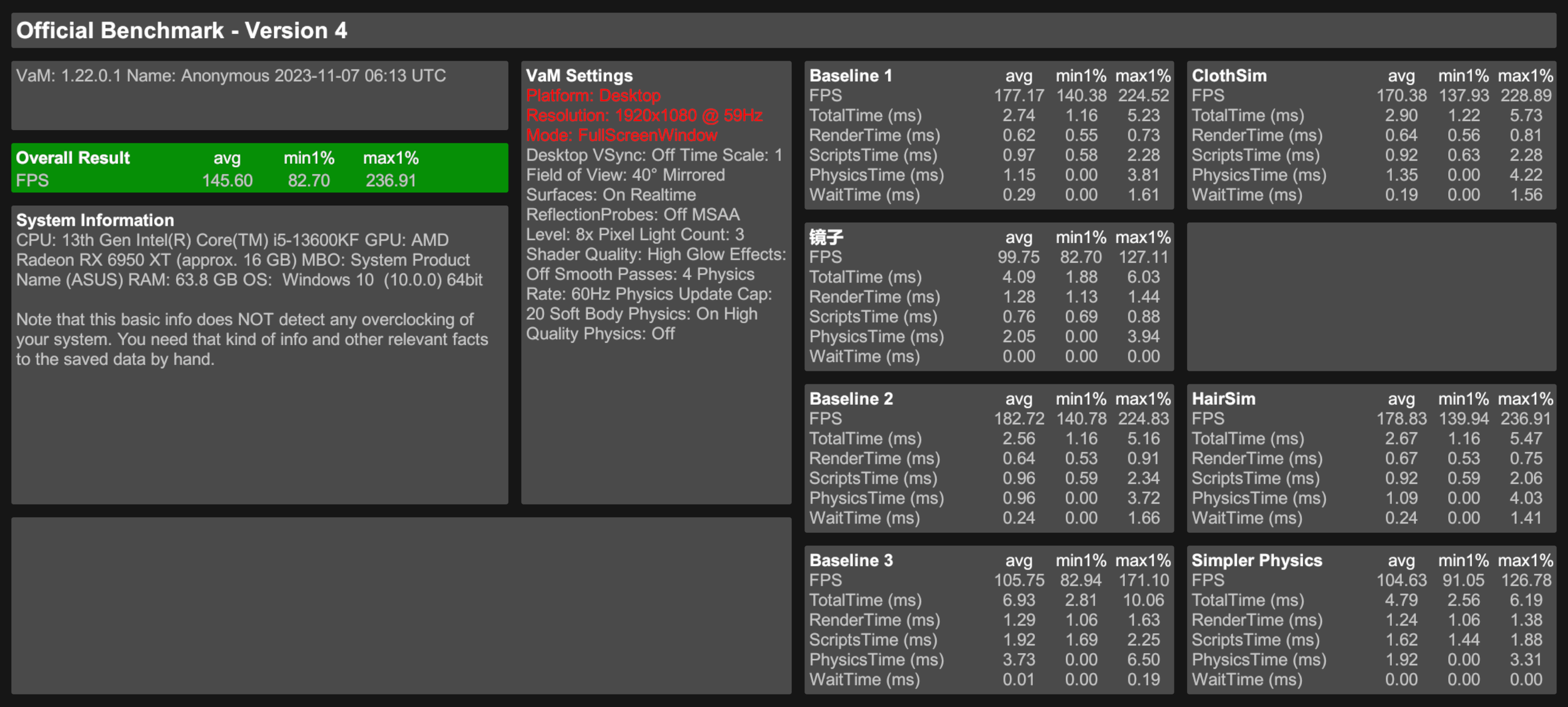

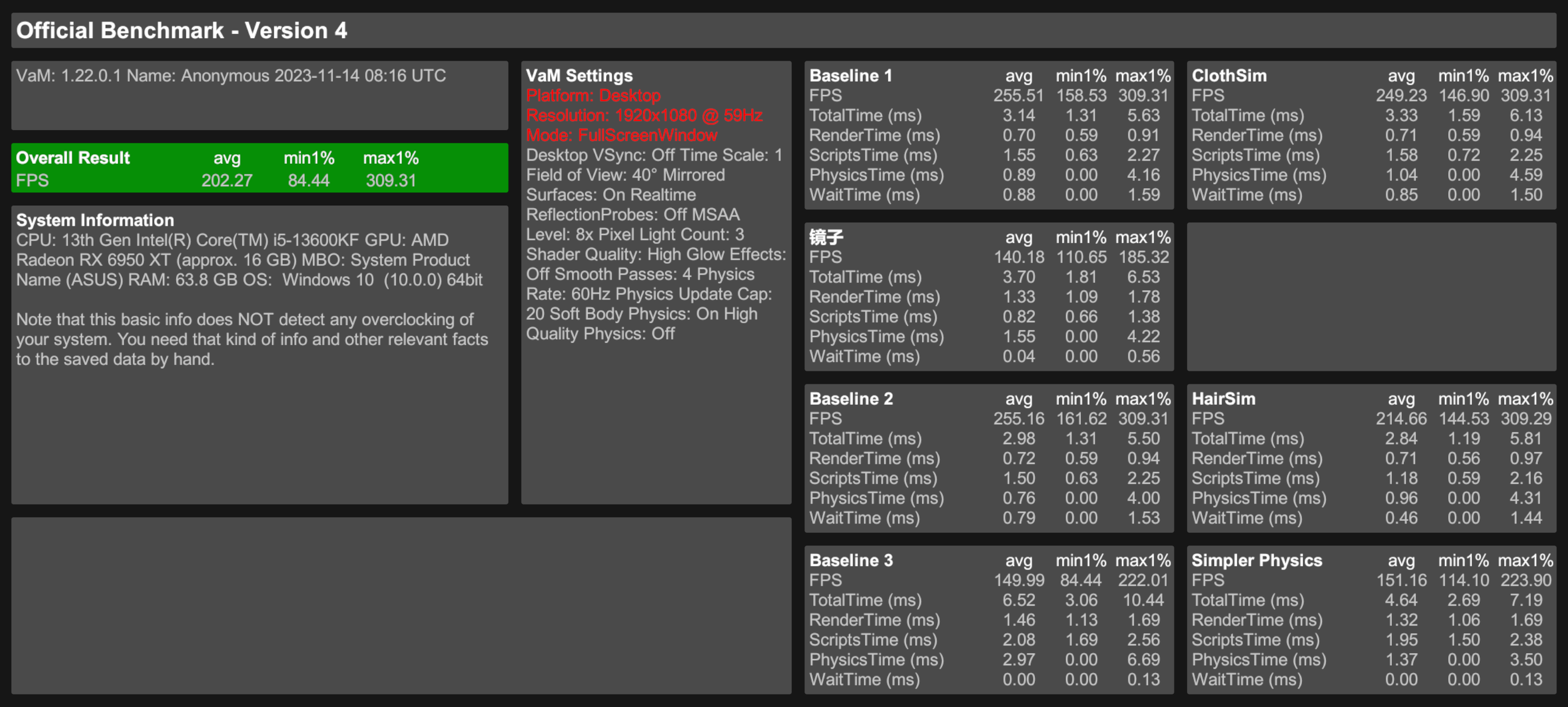

13600KF + 6950 XT, DDR4-3600

Windows 11

Windows 10

It is said that Windows 11 makes better use of Intel's big (P) and little (E) cores.

But my tests show that Windows 10 performs better than Windows 11.

-----------

8x tessellation (thanks, TToby)


By the way, does anyone know whether a look created with a lot of morphs from different .var files will still look as intended if I turn morph preload off? Can a scene or preset load those morphs anyway, or are they completely switched off and/or invisible? I think I'll have to try this out...

If someone wanted to build a new PC targeting the best hardware for the fastest VaM experience using this data, is there a compiled dump or spreadsheet of all these test results? For example, is the best buy an AMD X3D chip, an Intel 13th gen, or something else?

There's no compilation. For new chips, the 7800X3D and 13900K perform about the same in VaM. The 7800X3D costs less and uses much less power.

I can't get the benchmark to work; it says I have a modified version of the benchmark. So I re-downloaded a clean Virt-A-Mate install without any plugins or other content, went to the Hub, searched for Benchmark, clicked Download All, loaded the scene, and still got the same issue: "running a modified version".

My other VaM install is on an external SSD, which is disconnected from my machine. My fresh install is on my M.2 SSD.

How is it in VR?

Thinking about which GPU to buy.

Radeon doesn't work as well in VaM as Nvidia, and a 4070 Ti is enough, but a 4080 or 4090 is better.

Am I correct?

I would go for Nvidia. Many people would say "AMD has a better performance/price ratio", which is kind of true. But overall, Nvidia GPUs have way more ray tracing cores and way more L2 cache than AMD's. The GPU architecture of an Nvidia graphics card is just better and more future-proof.

You will have an advantage with Nvidia if you play with the newest technologies. DLSS has also been massively improved and works much better than AMD's FSR.

VaM runs on older technology, so you won't have a massive advantage with Nvidia over AMD. I haven't tried an AMD card with VaM yet, but I went from a GTX 1080 to an RTX 4080, and my performance has increased significantly.

If you also play games like Cyberpunk 2077 and Alan Wake 2, then Nvidia is the way to go if you want to use path tracing. If not, and strictly for VaM, then maybe AMD would be better.

The 4070 Ti is around 20% slower than the RTX 4080 in benchmarks.
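Note that "20% slower" and "20% faster" are not symmetric: if the 4070 Ti delivers 80% of a 4080's frame rate, the 4080 is 1 / 0.8 = 1.25 times as fast, i.e. 25% faster. A quick sketch of that conversion (the helper name is hypothetical):

```python
def percent_faster_from_slower(percent_slower: float) -> float:
    """If card A is p% slower than card B, return how much faster B is than A, in percent."""
    ratio = 1.0 - percent_slower / 100.0  # A's performance relative to B
    if ratio <= 0:
        raise ValueError("percent_slower must be below 100")
    return (1.0 / ratio - 1.0) * 100.0
```

So `percent_faster_from_slower(20)` is about 25, which is the number to weigh against the price difference.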


I did some research on this, and although I only have a 6800 XT, I did not find convincing evidence that Nvidia is in fact better. There are certain areas where Radeon lags behind, but the raw performance levels are the same as in other games. Where it lags behind is mostly ease of use (a software advantage for Nvidia): for VR, Nvidia has a much better ecosystem, and its architecture probably handles tessellation better, but you can work around all of this with some tinkering. On the other hand, Radeons have a huge advantage in VRAM capacity, which simply cannot be countered from Nvidia's side (a hardware disadvantage).

So in my opinion, it matters less whether you pick AMD or Nvidia and more how much VRAM you get: 16 GB at minimum, but used 3090s with 24 GB are already pretty cheap. Or you can constantly watch how your VRAM fills up with an overlay and restart the game when it gets full.
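For the "watch your VRAM fill up" approach, NVIDIA cards can be polled from the command line with `nvidia-smi --query-gpu=memory.used,memory.total --format=csv,noheader,nounits` (values in MiB; Radeon owners need a different tool, such as an overlay). A sketch in Python that runs and parses that query; the helper names and the 90% warning threshold are assumptions for illustration:

```python
import subprocess


def parse_vram_line(line: str) -> tuple[int, int]:
    """Parse one 'used, total' line (MiB) from nvidia-smi's csv,noheader,nounits output."""
    used, total = (int(field.strip()) for field in line.split(","))
    return used, total


def vram_usage() -> list[tuple[int, int]]:
    """Query every NVIDIA GPU; requires nvidia-smi on PATH."""
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [parse_vram_line(line) for line in out.splitlines() if line.strip()]


def nearly_full(used: int, total: int, threshold: float = 0.9) -> bool:
    """True when usage crosses the (assumed) warning threshold."""
    return used / total >= threshold
```

Calling `vram_usage()` every few seconds while VaM runs and printing a warning when `nearly_full` turns true gives a crude version of the overlay approach.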

Thanks for the detailed explanation.

I've read articles saying that VRAM and memory bus width are important for VR gaming and 4K, but on the other hand some articles say Radeon is not suitable for VR gaming because of VR optimization.

My concern is performance, especially in VR mode. Sorry for my limited wording.

I know well that the 4080 is better and the 4090 would be the best one, but I have a 2K 144 Hz monitor and the 4070 Ti is ideal for it, so the 4080 is excessive for non-VR gaming, even in Cyberpunk or Hogwarts Legacy. That's why I'm reluctant to buy the 4080.

Considering the balance between performance and cost, I think it's better to buy a 4070 Ti and, in one or two years, sell it and buy a 50xx-series card.

Otherwise, should I wait for the 4070 Ti Super? It's just a rumor for now.
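On the memory bus width point: peak VRAM bandwidth is the bus width in bytes times the per-pin data rate. A sketch using reference-card specs (RTX 4070 Ti: 192-bit at 21 Gbps; RTX 4080: 256-bit at 22.4 Gbps; partner cards may differ, so check yours). Keep in mind that both cards also lean on their large L2 caches, so raw bandwidth is only part of the picture.

```python
def vram_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: (bus width / 8 bits per byte) x per-pin data rate in Gbps."""
    return bus_width_bits / 8 * data_rate_gbps


# Reference-card specs; treat them as assumptions for your specific model.
cards = {
    "RTX 4070 Ti": (192, 21.0),
    "RTX 4080": (256, 22.4),
}
for name, (bus, rate) in cards.items():
    print(f"{name}: {vram_bandwidth_gbs(bus, rate):.1f} GB/s")
```

This prints 504.0 GB/s for the 4070 Ti and 716.8 GB/s for the 4080.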


I now have a 14th-gen i9-14900KF with an RTX 4080 Ventus OC and 32 GB of DDR5 at 6000 MHz, and I get around 90 FPS in Cyberpunk 2077 with DLSS on Auto and ray reconstruction and frame generation turned on, with everything at maximum settings and path tracing enabled at 3840x2160.

I also do VR gaming and like to play with Unreal Engine and DAZ3D. Sometimes I wondered whether I should spend another 500 bucks and just go for an RTX 4090. I also want to start creating my own content. So I use it for different tasks, not only gaming; that's why I went for an RTX 4080 at minimum. I'm also impressed by path tracing, the techniques behind it, and the new techniques developed over the last few years to get it running on current hardware, like ReSTIR and NRC. If you don't care about any of this and just want raw power, then Radeon is the better choice with a better performance/price ratio, or indeed an RTX 4070 Ti. There isn't enough information about the Super versions yet.

I got a lot of information from Digital Foundry, an amazing YouTube channel.

This video is about how CDPR and Nvidia pulled it off on current hardware, and it also touches on GPU architecture and the newest technologies.