Yes maybe, it's true that all the "ms" values look better everywhere, but I'm not used to checking those, I'm more used to relying on FPS in other benchmarks.

I'll take a closer look at what all this data means before I start investigating whether something is wrong on the driver/software side.

There is nothing really wrong with your setup. You are just GPU bottlenecked by your 6800 XT. So upgrading your CPU did not do much, because you needed to upgrade your GPU for more gains on your system.


Benchmark Result Discussion

- Thread starter MacGruber


It's crazy how some think you can handle this by pushing more hardware into it that gets utilized completely wrong

@ the time VAM came to existence and now even more so

Bruteforcing won't help

Don't really get your point. For better results you need stronger hardware, but yeah, in any case individual scene optimization is still necessary for the best result. If there will be a new engine that's cool, but until then we do what? Waiting and idling is not working for many.

Upgrade the GPU on a 3600x that was already limiting my actual one?

I think the actual combo is ok for this gen, I'm just using the bench to ensure everything is running as it should.

I have the same GPU, a 6800 XT with water cooling. I can push it beyond 2.7 GHz and can get 150 fps avg in this bench, but to be honest you don't need to. At bone-stock settings you can have everything VaM can give. 60 fps on desktop or 72 in VR (I assume, because I don't have a headset) is fine. Usually physics goes to shit, and that's covered as much as possible with the X3D.

2.7? congrats, mine doesn't go above 2.5 on a custom loop too, but still @stock

In VR it's very demanding, but generally it's possible to max out most of the settings if I only keep 2 or 3 light sources. With 4 to 6 it becomes a slide show, so overall it's kinda ok.

I just ran 3DMark and noticed again a slight loss of perf on the graphics side. Don't know what to think, but it doesn't look normal to me. At this point it would maybe be worth reinstalling Windows and doing a fresh driver install, but I'd rather avoid it.

That's within the statistical margin of error. Next time it could be similarly high without any change.

View attachment 184181

About VR: why don't you try FSR? I saw that there is a way to utilize it.
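The margin-of-error point above can be checked with a few repeated runs. This is only a minimal sketch: the helper name `within_noise`, the threshold of two standard deviations, and all the scores are assumptions for illustration, not numbers from this thread.

```python
from statistics import mean, stdev


def within_noise(baseline_runs, new_score, k=2.0):
    """Return True if new_score lies within k sample standard
    deviations of the mean of the baseline runs."""
    mu = mean(baseline_runs)
    sigma = stdev(baseline_runs)  # sample standard deviation, needs >= 2 runs
    return abs(new_score - mu) <= k * sigma


# Hypothetical graphics scores from five runs on an unchanged system.
baseline = [19850, 19920, 19780, 19890, 19810]

print(within_noise(baseline, 19800))  # small drop compared to the run-to-run spread
print(within_noise(baseline, 19000))  # much larger drop
```

If a new result falls inside that band, a driver or Windows reinstall is unlikely to be justified by the score alone. More runs give a more trustworthy spread.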

4090 is a freakin beast lol

Almost doubled hair-sim performance

CPU went much better too. I didn't OC it, i just turned off HT.

Oh yes it is

You turned off HT and your CPU performance increased in VaM? You mean because of physics time?

Did you do any before and after scene comparison in VaM? So that you know what extra fps this is giving you?

EDIT

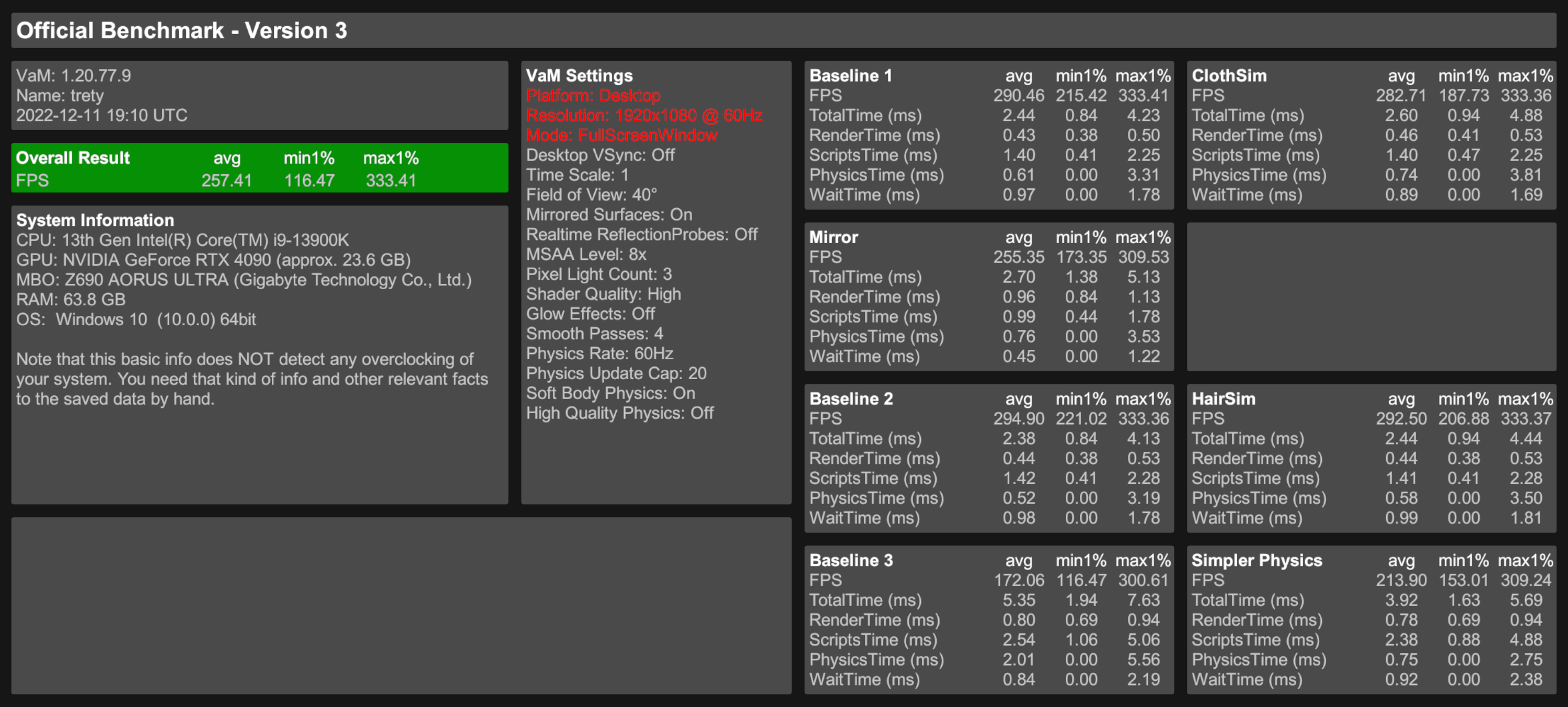

Just did 2 runs, one with HT and one without it. Physics time is indeed better. But still not on your level, what did you do?

with HT

without HT


Yeah i read ages ago that disabling HT might help with Intel CPUs.

I was running 10th gen back then i think, but it seems it's still true

Without HT you got slightly better results

Is your 4090 air-cooled? FE?

I'm running both at stock, just with HT disabled and a basic XMP profile for the DDR5 RAM. Tho i'm using 4 modules so it's no win-win situation lol.

Benchmark was run on vanilla-ish VaM, only default vars + benchmark installed. Also it's up to date - 1.20.77.13. It seems the new version is a bit more responsive too, so that might help too.

Also, both CPU and GPU are water cooled.

No, everything watercooled. 4090 TUF OC, CPU and GPU stock. I updated VaM but no real changes. Slightly better with HT on.

2.27ms physics time is where I'm at in Baseline 3 without HT, with HT I'm at 2.58ms. So again: what did you do?

I think it's possible that the reason is RAM: I'm still running my old DDR4 (3200 CL16), 4x8GB.


Seems like you're running 4 modules too, but DDR4.

So yeah, RAM might be a reason for our different results

I haven't really tested if there is any difference with 2x16 vs 4x16 in VaM, but since 4 modules run at a much lower frequency there should be some gap


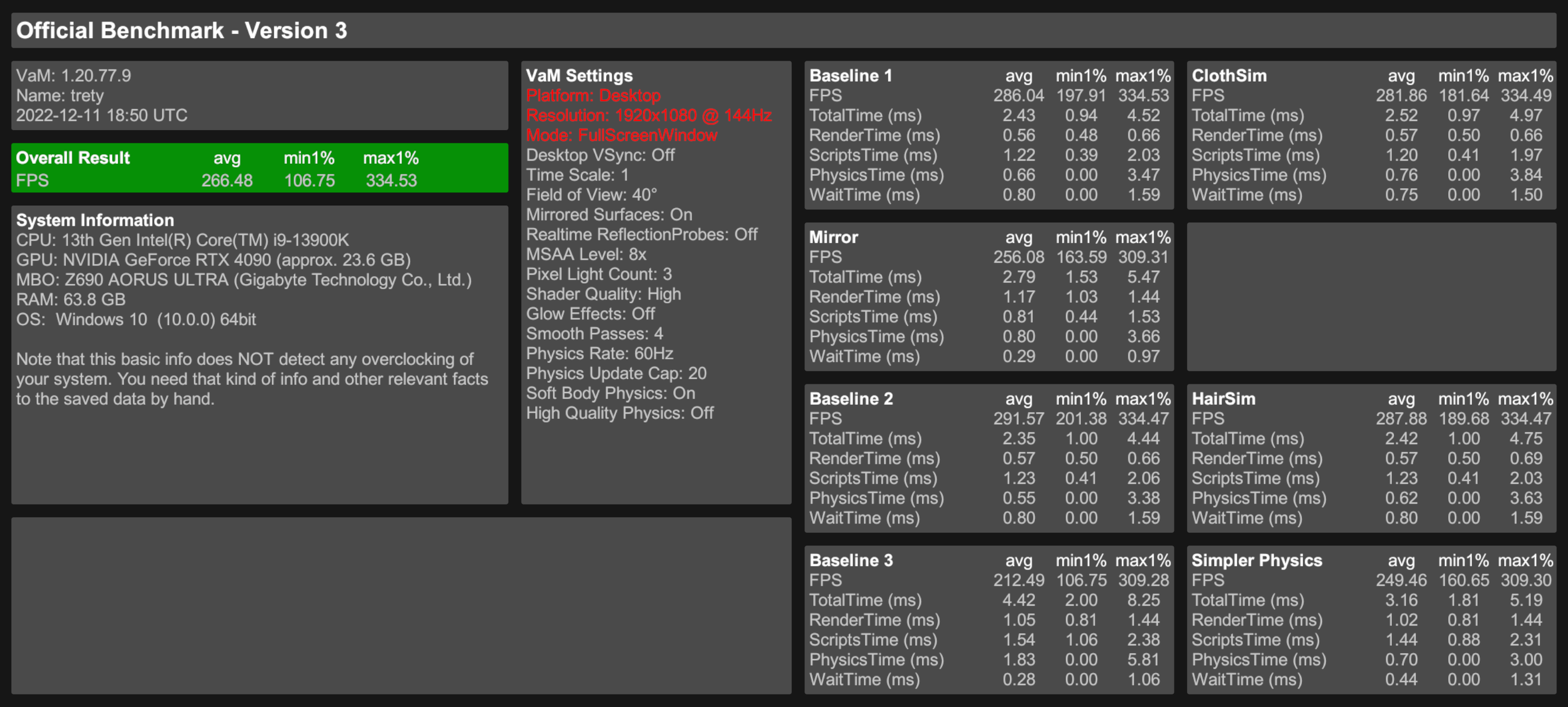

Moving from air-cooled 3090:

View attachment 184450

to water cooled 4090:

View attachment 184451

4090 is a freakin beast lol

Almost doubled hair-sim performance

CPU went much better too. I didn't OC it, i just turned off HT.

How come you didn’t change the CPU at all but your physics time halved in all benchmarks? Overall frame rates are totally dominated by physics time in this benchmark, so that makes a big difference to the increase you noticed in FPS here.


I was planning to do that next year, but since i already spent that much on the GPU...

Just changed my RAM modules from 4x16 [DDR5, 5600 MHz running in quad channel - but forgot to check its real speed before the change] to 2x32 [DDR5, 6000 MHz dual channel].

My physics times got reduced again, by a tiny bit.

So here it is. My CPU is exactly the same as yours [with HT disabled, all P and E cores enabled, no OC, running on Win10] but... yours is bottlenecked by DDR4 speed, and there are 4 modules too if i recall.

So that is the reason for the differences between our systems.


Thank you! Windows 10 on my side as well. Like I said: it must be the difference between DDR4 and DDR5. Probably the higher bandwidth, as we can see in your comparison, which improved with higher frequency. Thinking about it, it must be the overall latency, since our physics times are not that far apart. So if I changed mine from 3200 CL16 to 3600 CL14 I could see some benefit in physics time.

I assume you have something like 6000 MHz CL40, so that's a latency of 13.33 ns. 3200 CL16 is already at 10 ns. So no, it's not latency but bandwidth (frequency). Or probably a combination of both.
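The latency figures above follow from the usual first-word formula, CL divided by the memory clock, where the clock is half the advertised data rate. A minimal sketch of that arithmetic, plus theoretical peak bandwidth, is below. The function names are made up for illustration, the "MHz" values quoted in the thread are treated as data rates in MT/s, and the bandwidth figure assumes two channels with a 64-bit bus each, which is a theoretical ceiling rather than anything measured.

```python
def cas_latency_ns(data_rate_mts, cl):
    """First-word CAS latency in nanoseconds.

    DDR memory transfers twice per clock, so one clock period
    in ns is 2000 / data_rate when the data rate is in MT/s.
    """
    return cl * 2000.0 / data_rate_mts


def peak_bandwidth_gbs(data_rate_mts, channels=2, bus_bytes=8):
    """Theoretical peak bandwidth in GB/s.

    Assumes a 64-bit (8-byte) bus per channel; real throughput is lower.
    """
    return data_rate_mts * 1e6 * bus_bytes * channels / 1e9


kits = [
    ("DDR4-3200 CL16", 3200, 16),
    ("DDR4-3600 CL14", 3600, 14),
    ("DDR5-6000 CL40", 6000, 40),
]

for name, rate, cl in kits:
    latency = cas_latency_ns(rate, cl)
    bandwidth = peak_bandwidth_gbs(rate)
    print(f"{name}: {latency:.2f} ns, {bandwidth:.1f} GB/s peak")
```

This only covers CAS latency and peak transfer rate. Secondary timings and how many modules are installed also affect real-world results, so treat the numbers as a rough comparison.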


Sorry i'm not good at RAM QQ

i have that one:

F5-6000J3040G32GX2-TZ5RK F5-6000J3040G32GA2-TZ5RK - Overview - G.SKILL International Enterprise Co., Ltd. (www.gskill.com)

So it's CL30?

And.. i don't even know how these 'latencies' affect overall module performance. I saw DDR5 has higher latencies than DDR4, but also much higher clocks, which can compensate for that a bit i guess

Correct. So yours has the same latency as mine, 10 ns. Here's a calculator if you're interested: https://notkyon.moe/ram-latency.htm

Whether you benefit more from frequency (MHz) or CAS latency (CL) depends on what software (games, programs) you are running. Some prefer higher frequency, some prefer lower latency. But that's like a mystery to me as well

Not even all games today run faster with DDR5. So the price/performance ratio with DDR5 right now is still not that good overall but it's getting better slowly with lower prices.

In addition, you only really profit from faster RAM if you're CPU bottlenecked, which obviously is often the case within VaM.

If you're GPU bottlenecked anyway, faster RAM doesn't do anything. It's just waiting together with the CPU for the GPU.

Here's a good summary of DDR4 vs DDR5 in 1080p, WQHD and 4K (in games):

This looks like a good overall test as well: https://www.tomshardware.com/features/ddr5-vs-ddr4-is-it-time-to-upgrade-your-ram
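The CPU-bound vs GPU-bound point above can be illustrated with a deliberately simplified model. It assumes CPU and GPU work overlap perfectly, so the slower side alone sets the frame time. Real engines do not behave this cleanly, and the function name and all timings here are hypothetical, not measurements from this thread.

```python
def estimated_fps(cpu_ms, gpu_ms):
    """Estimate FPS under the assumption that the slower of the two
    per-frame costs (CPU or GPU, in milliseconds) sets the frame time."""
    frame_ms = max(cpu_ms, gpu_ms)
    return 1000.0 / frame_ms


# CPU-bound case: faster RAM shaves CPU time, so FPS goes up.
print(estimated_fps(cpu_ms=8.0, gpu_ms=5.0))
print(estimated_fps(cpu_ms=7.0, gpu_ms=5.0))

# GPU-bound case: the same CPU-side saving changes nothing,
# because the CPU is already waiting for the GPU.
print(estimated_fps(cpu_ms=4.0, gpu_ms=10.0))
print(estimated_fps(cpu_ms=3.5, gpu_ms=10.0))
```

In this toy model, a RAM upgrade only matters in the first case, which matches the observation that VaM is often limited by the CPU side.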


I just tried disabling E cores... Results are ... weird.

'normal state'

With E cores disabled.

It actually went worse without E cores. Dunno, maybe i'm missing some step, like an OC for the P cores when i disable E, but i thought disabling them alone would boost single-core performance a bit.

Funny thing i noticed... There is pretty much no difference at all for 4090 if it's 1440p or 1080p lol


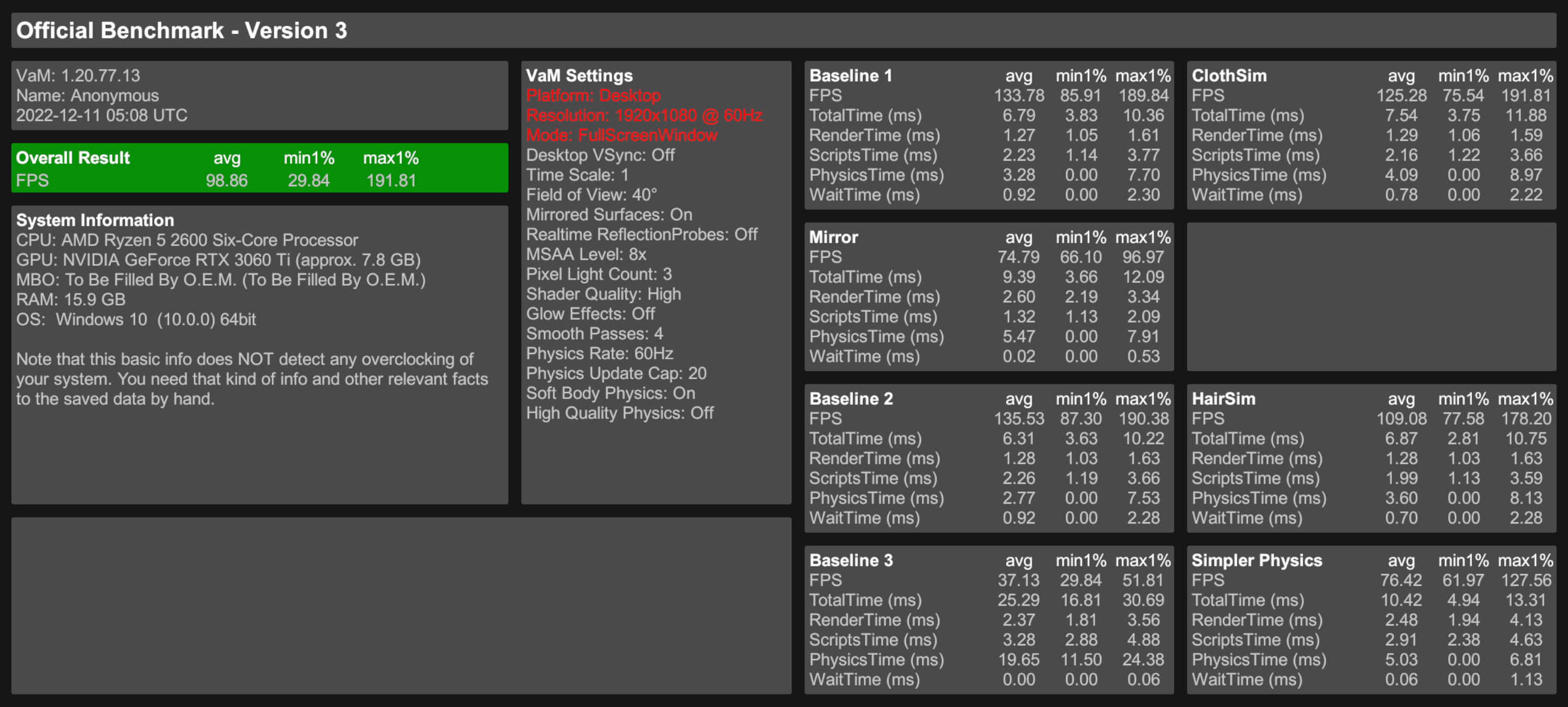

Board is an x370 so I could upgrade to a r5 5600 but I guess 3060ti would still be underwhelming so getting a VR set would be pointless.

I tested on sata and nvme but got the same results, windows is on the sata would that make any difference?

r5 2600 @ 4100

3060 ti mem 7700 core 2055

ddr4 2400

r5 2600 @ stock 3750

1060 +600 mem +75 core


Yeah, I tried disabling the E cores as well a long time ago, same result as yours. I don't understand it either. Seems like VaM takes advantage of more cores for whatever reason.

Because at both resolutions you are "bottlenecked" by the engine. You can't push more than around 308 fps. And the 4090 is powerful enough to hit that limit even at 1440p.

I'd say no. SATA already is pretty fast, no real need to move everything to NVMe. It could speed up your loading times for scenes and such.

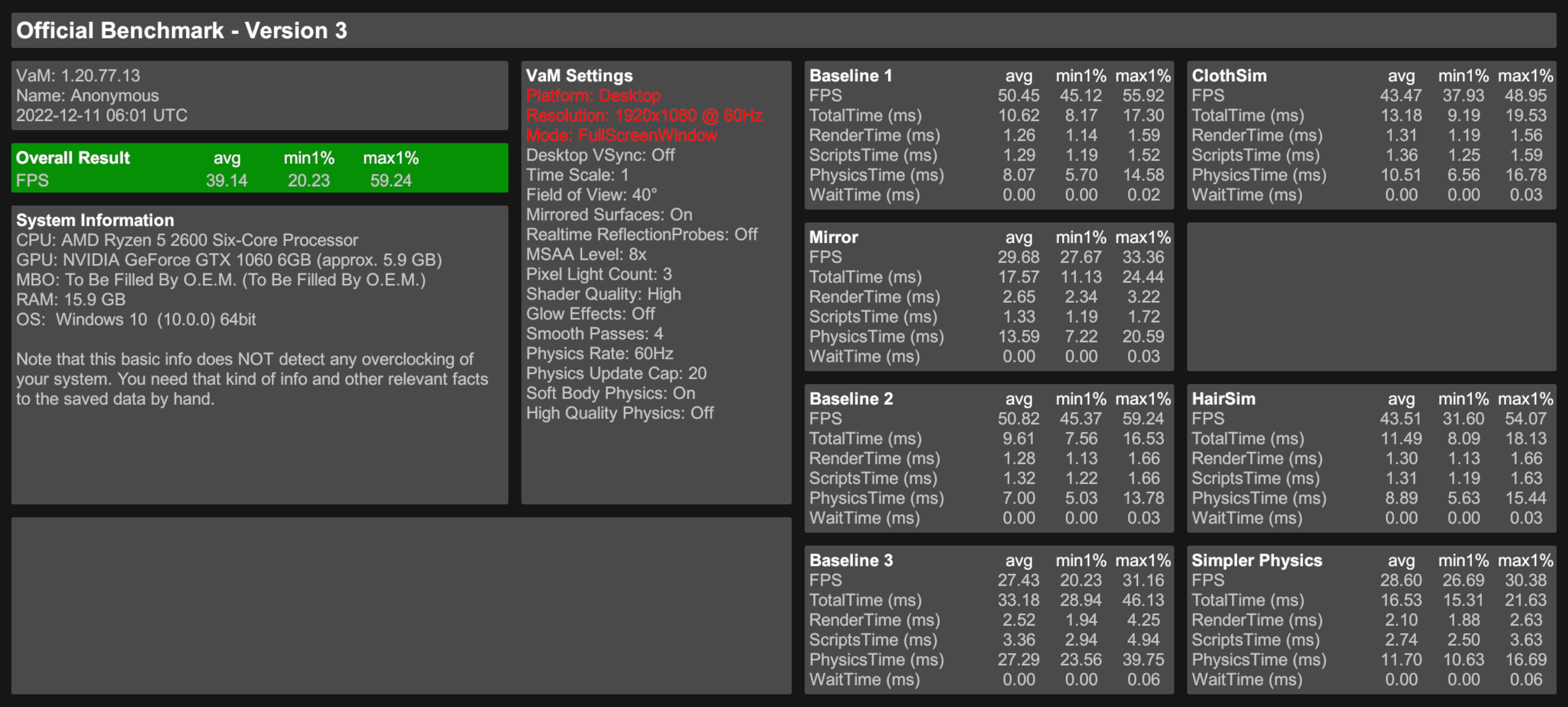

Ryzen 1600 + 6700XT

RAM 2400MHz

Is this Baseline 3 right? 44ms physics time is way too high. I didn't find any other CPU like the 1600 to compare in this thread.


That's about right for an older CPU:

Hardware Performance comparison tables (hub.virtamate.com)

I believe that's right... I'm on a i5 12600k and a RTX2070 and I think you're getting CPU bound during baseline 3. See my benchmark below.

For the people running 4090 level setups, what physics settings are you using usually?

Whatever physics my cpu can handle for the scene.