This is very helpful, thank you!

May I ask if this is also true if I have several versions of the same var? Will the morphs be "applied" once for every version?

To illustrate with an example, if my AddonPackages contain "cotyounoyume.ExpressionBlushingAndTears.37.var" and "cotyounoyume.ExpressionBlushingAndTears.36.var", will all the morphs inside be applied twice (i.e. should I get rid of the older version)?

Hello le_hibou, your Python script has given me some insights. I've been analyzing how the number of .var files in the AddonPackages folder affects load time and CPU usage. Your script gave me the idea to count the total number of morphs across all .var files in AddonPackages, which is very helpful for my analysis. Perhaps there will be some way to handle this situation in the future.
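For anyone who wants to try the same count, here is a minimal sketch, not le_hibou's actual script. It assumes that .var files are ordinary zip archives (7-zip opens them) and that each morph has one `.vmi` file; the function names are my own and the `.vmi` rule is an assumption you may need to adjust.

```python
import zipfile
from pathlib import Path

# Assumption: each morph is represented by exactly one .vmi file in the package.
MORPH_SUFFIXES = (".vmi",)


def count_morphs_in_var(var_path):
    """Return how many morph files one .var archive contains."""
    with zipfile.ZipFile(var_path) as archive:
        return sum(
            1 for name in archive.namelist()
            if name.lower().endswith(MORPH_SUFFIXES)
        )


def count_morphs(addon_dir):
    """Map each .var file name under addon_dir to its morph count.

    Archives that cannot be opened as zip files are reported as None.
    """
    totals = {}
    for var_path in sorted(Path(addon_dir).rglob("*.var")):
        try:
            totals[var_path.name] = count_morphs_in_var(var_path)
        except zipfile.BadZipFile:
            totals[var_path.name] = None
    return totals
```

Calling `count_morphs("AddonPackages")` and summing the values that are not `None` gives the overall total for the folder.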

Returning to your question, are the morphs in the old .var files in the addonpackages folder monitored or pre-loaded when VAM is running? From my personal tests, I believe the answer is yes.
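To see which packages are present in more than one version, something like the sketch below could help. It assumes file names follow the `creator.package.version.var` pattern from the example in the question, with a numeric version; the function name is hypothetical. It only lists candidates and does not delete anything, since a scene may depend on a specific older version.

```python
import re
from collections import defaultdict

# Assumption: names look like "creator.package.version.var" with a numeric version.
VAR_NAME = re.compile(
    r"^(?P<creator>[^.]+)\.(?P<package>[^.]+)\.(?P<version>\d+)\.var$",
    re.IGNORECASE,
)


def find_older_versions(filenames):
    """Map the newest file of each package to the list of its older versions."""
    versions = defaultdict(list)
    for name in filenames:
        match = VAR_NAME.match(name)
        if match:
            key = (match["creator"], match["package"])
            versions[key].append((int(match["version"]), name))

    older = {}
    for found in versions.values():
        if len(found) > 1:
            found.sort()  # ascending by numeric version
            newest = found[-1][1]
            older[newest] = [name for _, name in found[:-1]]
    return older
```

With the two files from the question, the result maps `cotyounoyume.ExpressionBlushingAndTears.37.var` to a list containing only the `.36.var` file.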

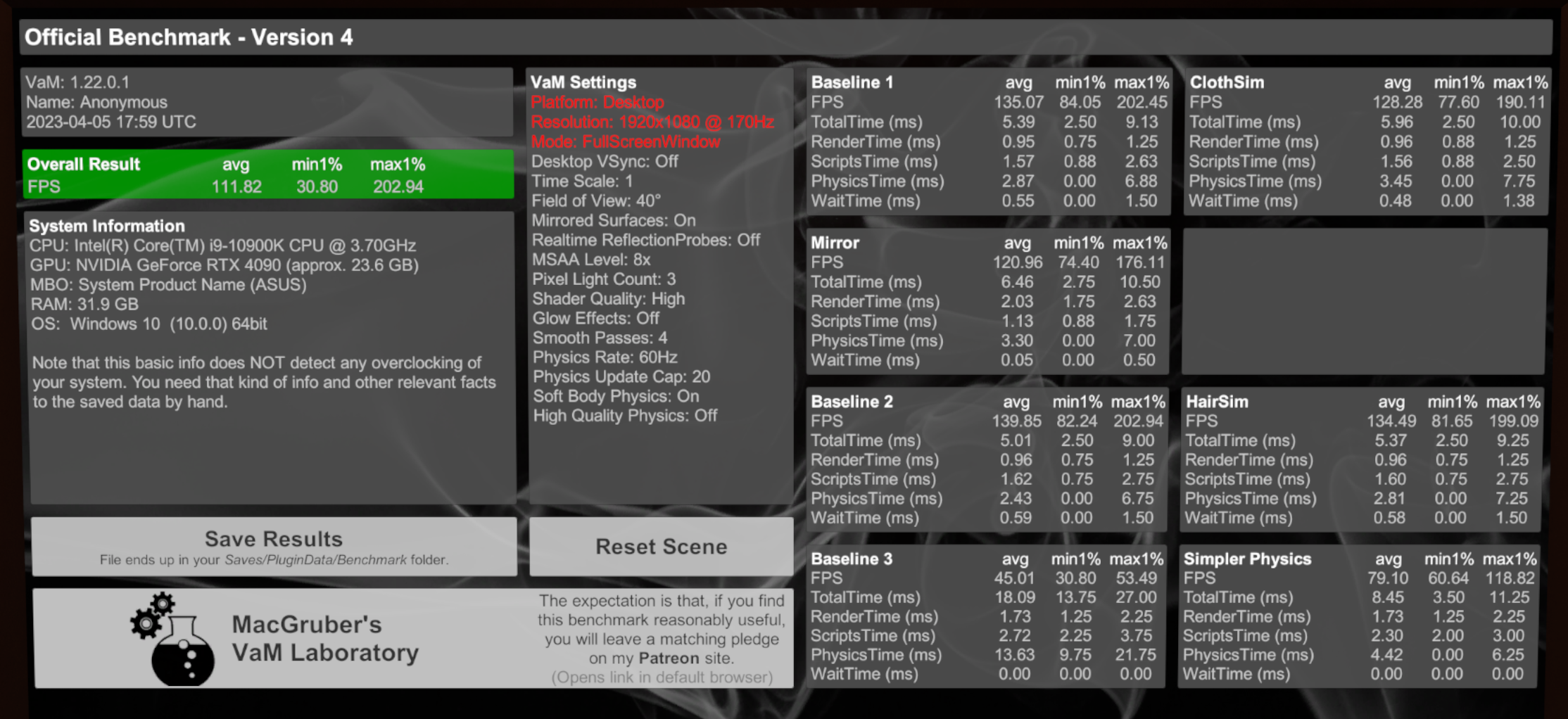

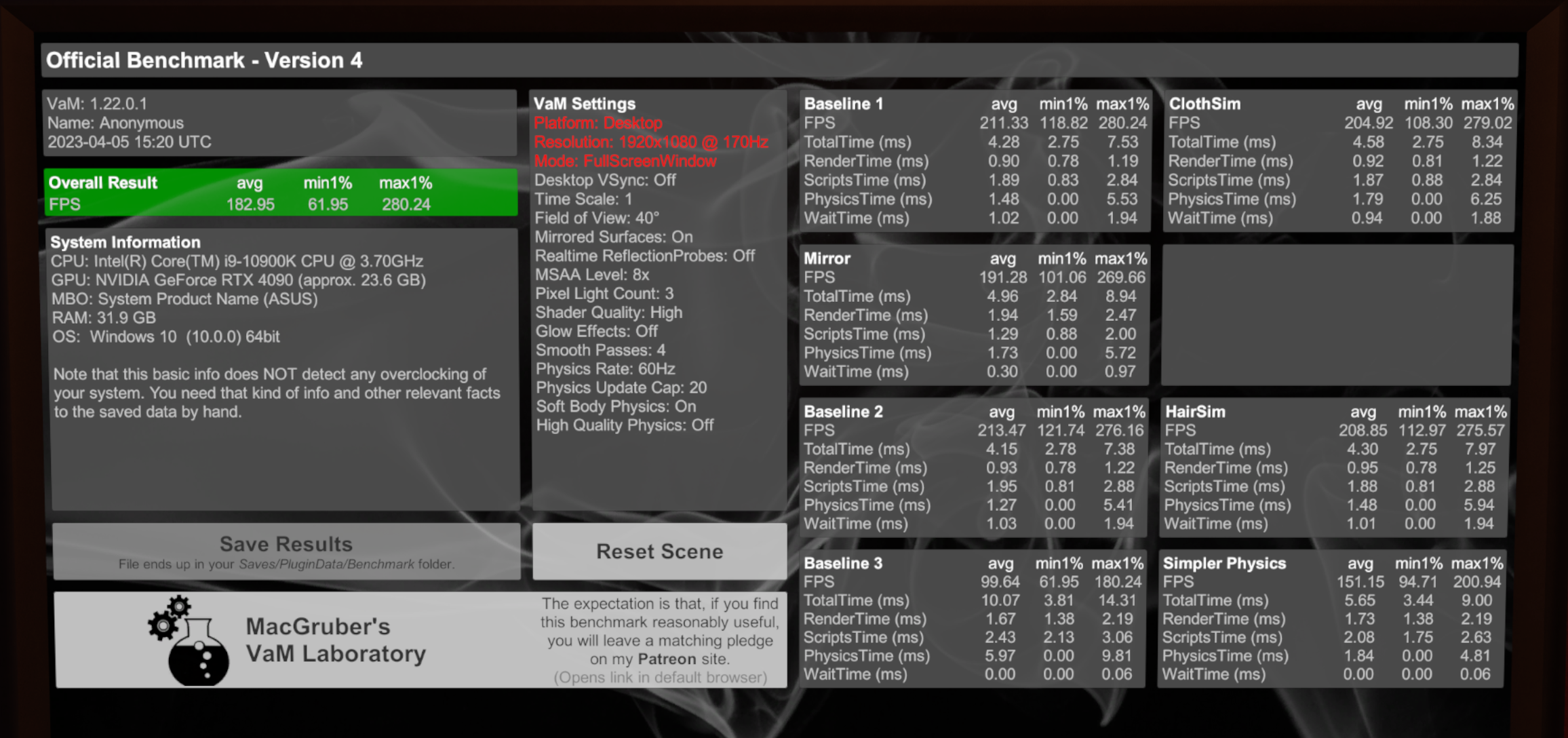

I tried unzipping over 20,000 .var files with 7-zip and putting the extracted contents directly into the VAM directory. In that setup, the AddonPackages folder kept only the .var files that included VAMX and those that existed at installation time. I then ran repeated "timing + recording" tests on the same scene in these two environments. As my background is in statistics, I needed more than 30 tests for the results to be reliable. With nearly 20,000 scenes in the scene browser, the loading time for the same content was about 16 minutes versus 1 minute 30 seconds.

The time it took for VAM itself to start and launch the scene browser was about 3 minutes versus 40 seconds.

I work in data analysis and statistics, so I only trust the results of actual tests. You can refer to the EXTRACTOR script I wrote before and the .var file check script I just uploaded. I need some time to think about how to improve the path structure, so I haven't uploaded these recording results yet. But I'm confident that any user can reproduce this difference in operation.

Loading time and memory usage are of course the most obvious effects. However, about 24 hours ago, I tried to load SPAQ's ALIVE plugin (without the UI, only ALIVE) onto two people in a scene. ALIVE, in my judgement, is a very complex plugin that runs on the .NET Framework. When I loaded ALIVE for the second person, the .NET heap sections limit was triggered and VAM crashed. My system memory is 196GB, and RAM usage at the time was "only 45GB", far from my system memory limit.

From my past experience, this is because the .NET Framework has a limit of 1024 heap sections, each of which can hold 65,536 objects/morphs. In other words, the upper limit on objects and morphs that the entire VAM software can load is about 67 million. Exceeding this triggers a fatal error. I've run into similar situations when playing old games from Illusion Co., JP. This is a common topic on Unity game development forums. The correct term is "Garbage Collector Unloading Problem", also known as "Data Fragmentation = Optimization Problem".
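The "about 67 million" figure is just the product of the two numbers above. A quick check, taking both limits as stated in this post rather than as verified values:

```python
# Both limits are the figures quoted in this post, not independently verified.
HEAP_SECTIONS = 1024
OBJECTS_PER_SECTION = 65_536

max_objects = HEAP_SECTIONS * OBJECTS_PER_SECTION
print(f"{max_objects:,}")  # 67,108,864
```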

What I want to emphasize is: I probably know what happened, and maybe I know some small tricks that might improve the situation, but that doesn't mean this situation is easy to handle. In particular, prefetching files into memory and managing the CPU time spent on that data both involve statistical "guessing skills". This is very difficult and takes time, manpower and knowledge to do well. That is why many large production companies ship games that are extremely poorly optimized at release.

In the past, when Intel was developing CPUs, it simply increased cache capacity and pipeline length while ignoring the fact that data prefetching depends on accurate statistical guessing. The result was that the CPUs of that era were slow, hot and power-hungry because of repeated calculation and data fetching. If Intel and today's large game development companies cannot avoid these problems, we cannot demand that VAM never encounter them or easily handle them outside its roadmap. This is really difficult.