SallySoundFX is designed to automate visualizations based on audio currently playing in VAM.

For example, it can listen to a frequency and flash a spotlight with every beat.

It can be linked to anything that accepts 'value Actions' from 0.0 to 1.0.

It can use a microphone too.

It works with audio from any kind of Unity AudioSource, but not with the embedded browser (WebPanel or TV).

Version 2.01

SallySoundFX is now a decoupled Master-Plugin that sends visualization-data to Slave-Plugins.

The demo scene is separate to keep this var-package a small dependency for custom scenes.

You can now add some basic premade visualizations by adding a Slave-Plugin to an Atom.

However(!) - THE most powerful feature is still the Trigger 1 / 2 Actions.

Read the "Control the light intensity with every beat"-part below to learn that.

_________________________________________________________________________________________

Everything below this line was for Version 1. Most info is still relevant tho.

Guides:

Installation from VAM Hub or manual

How to use SallySoundFX in your scene?

How to use a microphone?

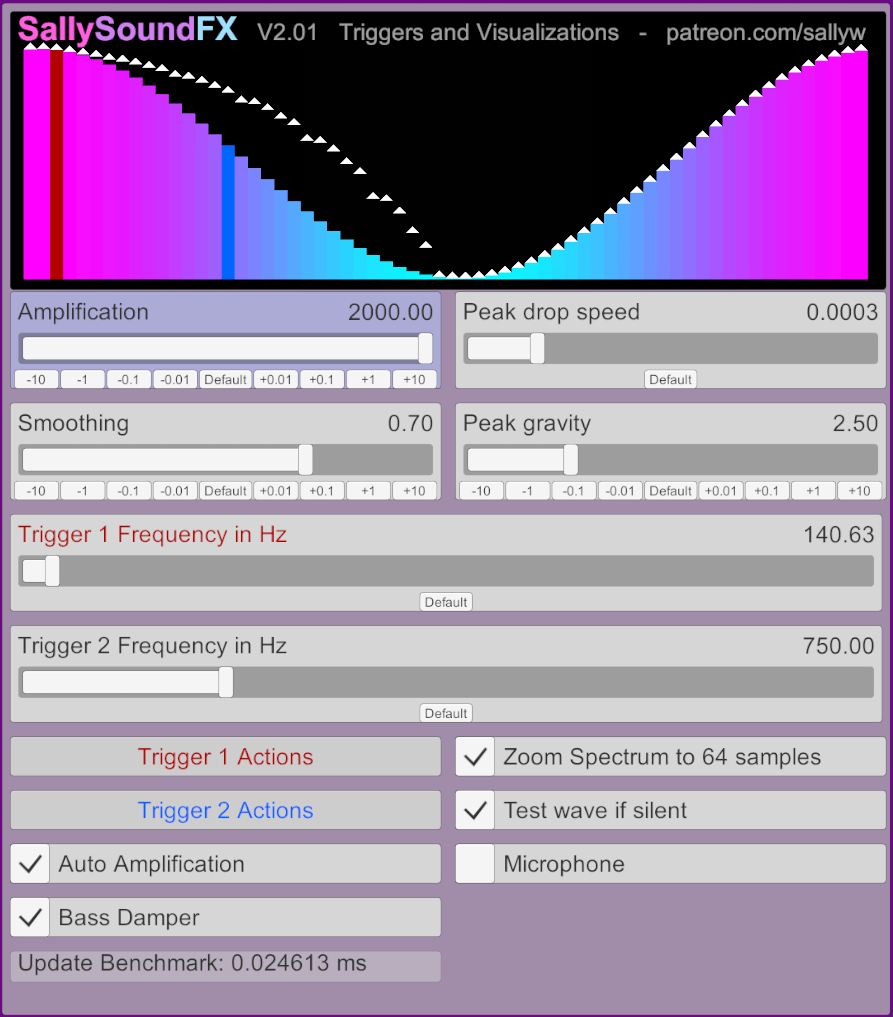

User Interface:

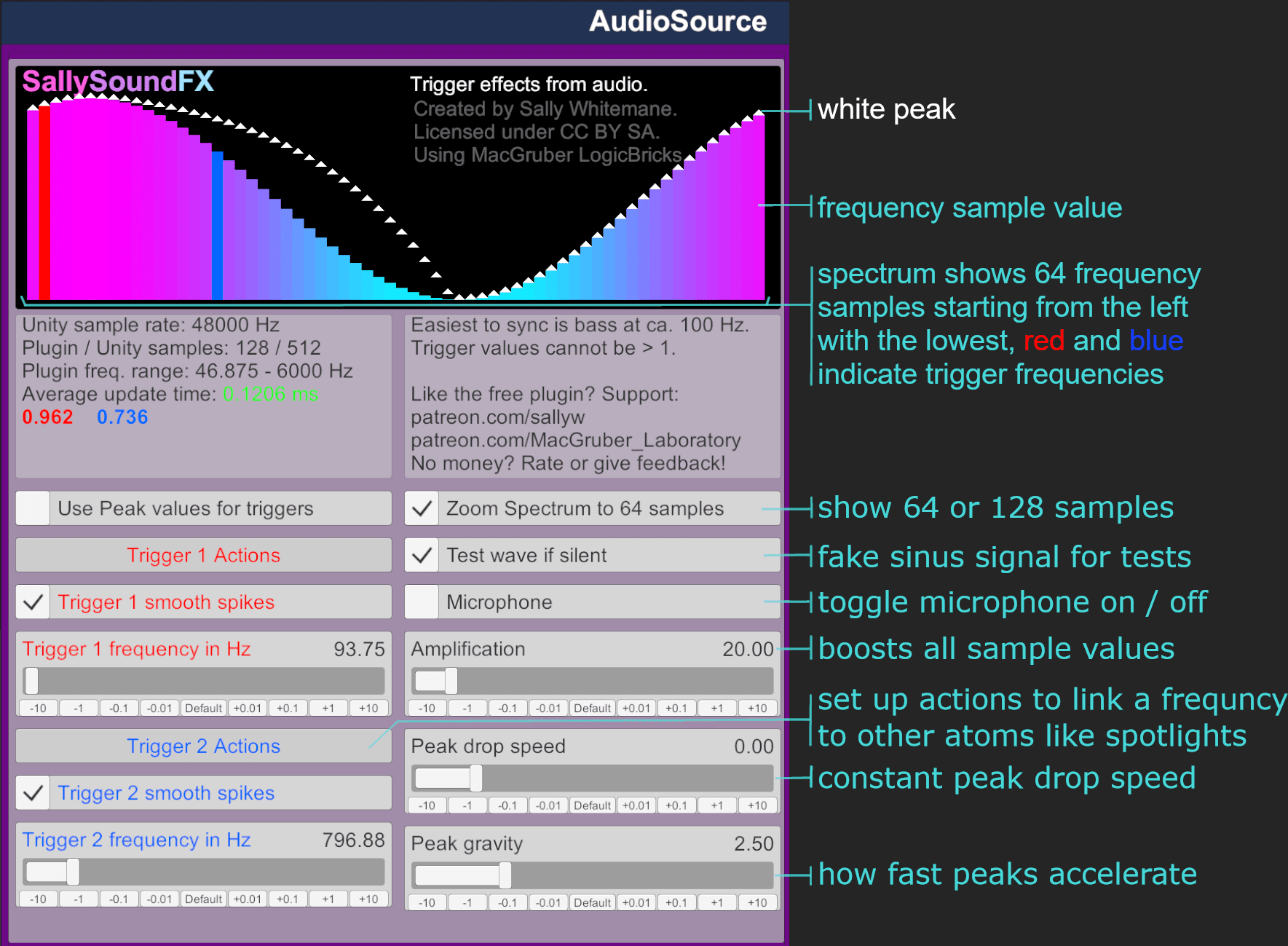

By default, the Plugin UI shows a sine test wave when no audio is playing. This can be turned off. It can be used to test basic scene visualization while not listening to anything. However, keep in mind that this 'signal' is unrealistic. Real world music will result in different visuals. Therefore it is better to test with real music.

Performance:

... is not an issue. The plugin is very lightweight and has been tested to ensure high framerates. Processing the Update() call for the Demo scene is done in 0.1 ms / 0.0001 seconds on a Ryzen 7 5800X. CPU-intensive calculations like Mathf.Sin()-calls have been optimized to use pre-calculated look-up-tables.
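The look-up-table idea can be sketched roughly like this. It is written in Python for brevity (the plugin itself is C#), and the table size of 1024 and the name `fast_sin` are assumptions for illustration, not taken from the plugin's source.

```python
import math

# Assumed table resolution; the plugin's real table size is not documented.
TABLE_SIZE = 1024

# Pre-calculate one full sine period once, instead of calling sin() every frame.
SIN_TABLE = [math.sin(2.0 * math.pi * i / TABLE_SIZE) for i in range(TABLE_SIZE)]


def fast_sin(angle):
    """Approximate sin(angle), angle in radians, by nearest-entry table lookup."""
    index = int(round(angle / (2.0 * math.pi) * TABLE_SIZE)) % TABLE_SIZE
    return SIN_TABLE[index]
```

With 1024 entries the lookup is off by at most about 0.003, which should be invisible in a visualization. A bigger table trades memory for precision.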

To verify the performance the plugin shows a colored 'Average update time' in milliseconds for the last 80 frames. The time will be yellow if greater than 0.25 ms and red if greater than 0.5 ms.
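A rolling average like this could look roughly as follows. This is a Python sketch, not the plugin's code: the class name `UpdateTimer` and the label "normal" for the non-warning color are made up here, only the 80-frame window and the 0.25 ms / 0.5 ms thresholds come from the text above.

```python
from collections import deque


class UpdateTimer:
    """Keeps the last `window` update times (in ms) and rates their average."""

    def __init__(self, window=80):
        # deque with maxlen drops the oldest entry automatically
        self.samples = deque(maxlen=window)

    def add(self, milliseconds):
        self.samples.append(milliseconds)

    def average(self):
        if not self.samples:
            return 0.0
        return sum(self.samples) / len(self.samples)

    def color(self):
        avg = self.average()
        if avg > 0.5:
            return "red"
        if avg > 0.25:
            return "yellow"
        return "normal"  # hypothetical label, the real default color is not stated
```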

For coders:

The biggest performance hits are the Trigger()-calls and the required GetSpectrumData()-call. I've tested the various FFT window types (FFT = Fast Fourier Transform, which Unity uses to produce the audio sample data). To my surprise some low quality windows actually performed worse for unknown reasons. The Plugin now uses Blackman as a balanced choice between speed and quality. Blackman-Harris is the only higher quality, but slower window. However the biggest performance hit comes from chain-triggering many things.

How it works in detail:

The key for audio visualization is to get the frequency sample data from VAM. Since VAM is built with Unity we can look at its documentation and find the GetSpectrumData-function. SallySoundFX uses channel 0. That's left. It requests 512 samples. Each sample represents a slice of the spectrum between 0 Hz and half the output sample rate, so 0 to 24000 Hz at Unity's typical AudioSettings.outputSampleRate of 48000 Hz. This is a good tradeoff between precision and speed. Since high frequencies are less relevant and to speed up later 'signal processing' in CPU intensive loops, only the first 128 samples are used. The remaining 384 are ignored, but they have to be requested to have enough precision for the important low frequencies.
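To make the sample numbers more concrete, here is a small Python sketch of the arithmetic (the plugin itself is C#). It assumes a 48000 Hz output sample rate and that the spectrum spans 0 Hz to half the sample rate, split evenly over the requested samples. The helper names are made up for this example.

```python
SAMPLE_RATE = 48000   # assumed AudioSettings.outputSampleRate
NUM_SAMPLES = 512     # samples requested from GetSpectrumData
USED_SAMPLES = 128    # samples actually processed

# Width of one sample ("bin") in Hz: half the sample rate spread over all samples.
BIN_WIDTH = (SAMPLE_RATE / 2) / NUM_SAMPLES


def bin_to_hz(index):
    """Lower frequency edge of the given sample index."""
    return index * BIN_WIDTH


def hz_to_bin(frequency):
    """Index of the used sample that contains the given frequency (clamped)."""
    return min(int(frequency / BIN_WIDTH), USED_SAMPLES - 1)
```

Under these assumptions one sample is 46.875 Hz wide and the 128 used samples cover 0 to 6000 Hz. Requesting only 128 samples in total would make each one 187.5 Hz wide, which is too coarse for the bass range.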

After that it's basically just additional signal processing to make the sample-data usable for good looking visualizations. For example an amplifier is required. All this is happening with every frame and the fast signal changes appear as flickering. Therefore it's a good idea to do some fancy math to smooth the 'signal'.
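One common way to amplify and smooth such a signal is shown below as a Python sketch. It is not the plugin's actual math: the gain and the rise / fall rates are invented values, and the "fast attack, slow decay" approach is just one typical choice to reduce flickering.

```python
def smooth(previous, current, gain=4.0, rise=0.6, fall=0.15):
    """Return the next displayed value for one sample.

    previous: value shown in the last frame (0.0 to 1.0)
    current:  raw value from the spectrum data for this frame
    gain, rise, fall: hypothetical tuning values
    """
    # Amplify the raw value and clamp it to the usable 0.0 to 1.0 range.
    target = min(current * gain, 1.0)
    # React quickly to a rising signal, fall back slowly to avoid flicker.
    rate = rise if target > previous else fall
    return previous + (target - previous) * rate
```

Calling this once per frame for each of the 128 samples (or each band) gives values that jump up with a beat and fade out over the next frames.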

To make sure the plugin shows correct frequency data it has been verified with 3000 and 6000 Hz sine-wave files.

Credits:

Sally Whitemane - Creator of SallySoundFX Plugin - https://www.patreon.com/sallyw

MacGruber - Creator of LogicBricks Plugin - https://www.patreon.com/MacGruber_Laboratory

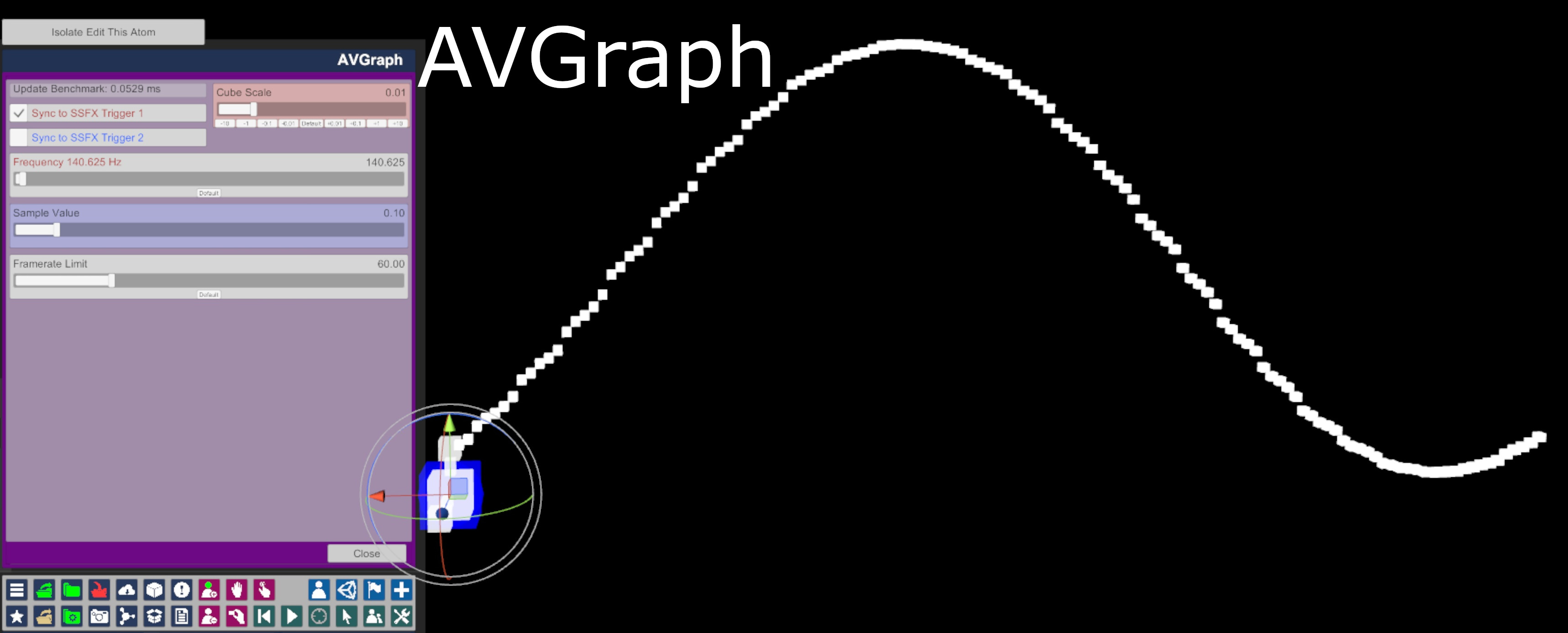

Creates a cube and makes it bounce to the bass on the y axis relative to its atom position.

https://mega.nz/file/D6YywYqT#oA_GXmnEzkoFEAAJWvKCtLrPgYdnWaPM-Mgbwh7qC3M

-> remember to load the .cslist-file, not the .cs file

To test, it's fine to use the example name. If you rename it, make sure:

-> class name and .cs-file name start with 'AVSLAVE_', otherwise audio sync will not work

-> edit name in .cslist-file to match file name

Overview of Audio Visualization-Plugins (AVSlaves):

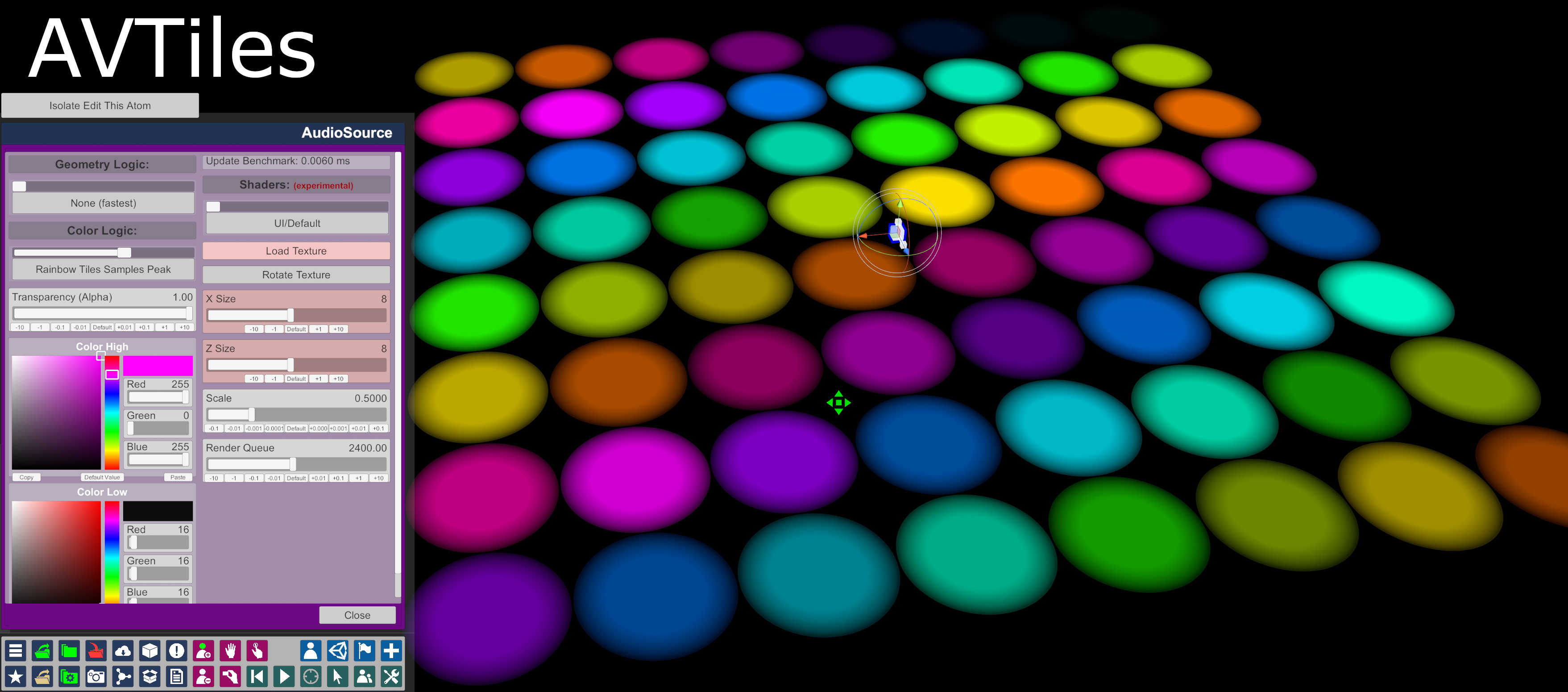

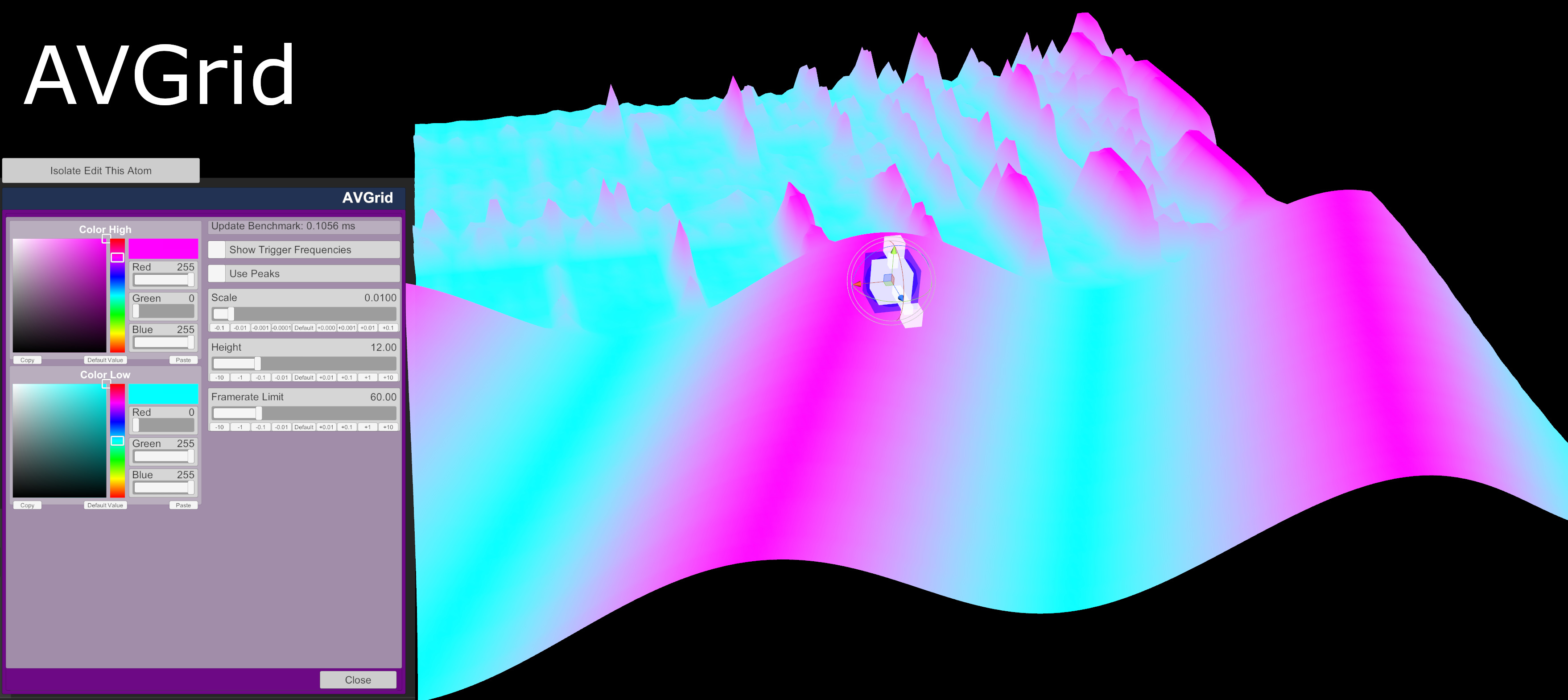

The most customizable visualization so far. This was previously the "dancefloor" from version 1. It allows loading custom textures from other VARs or any other path inside the VaM-folder.

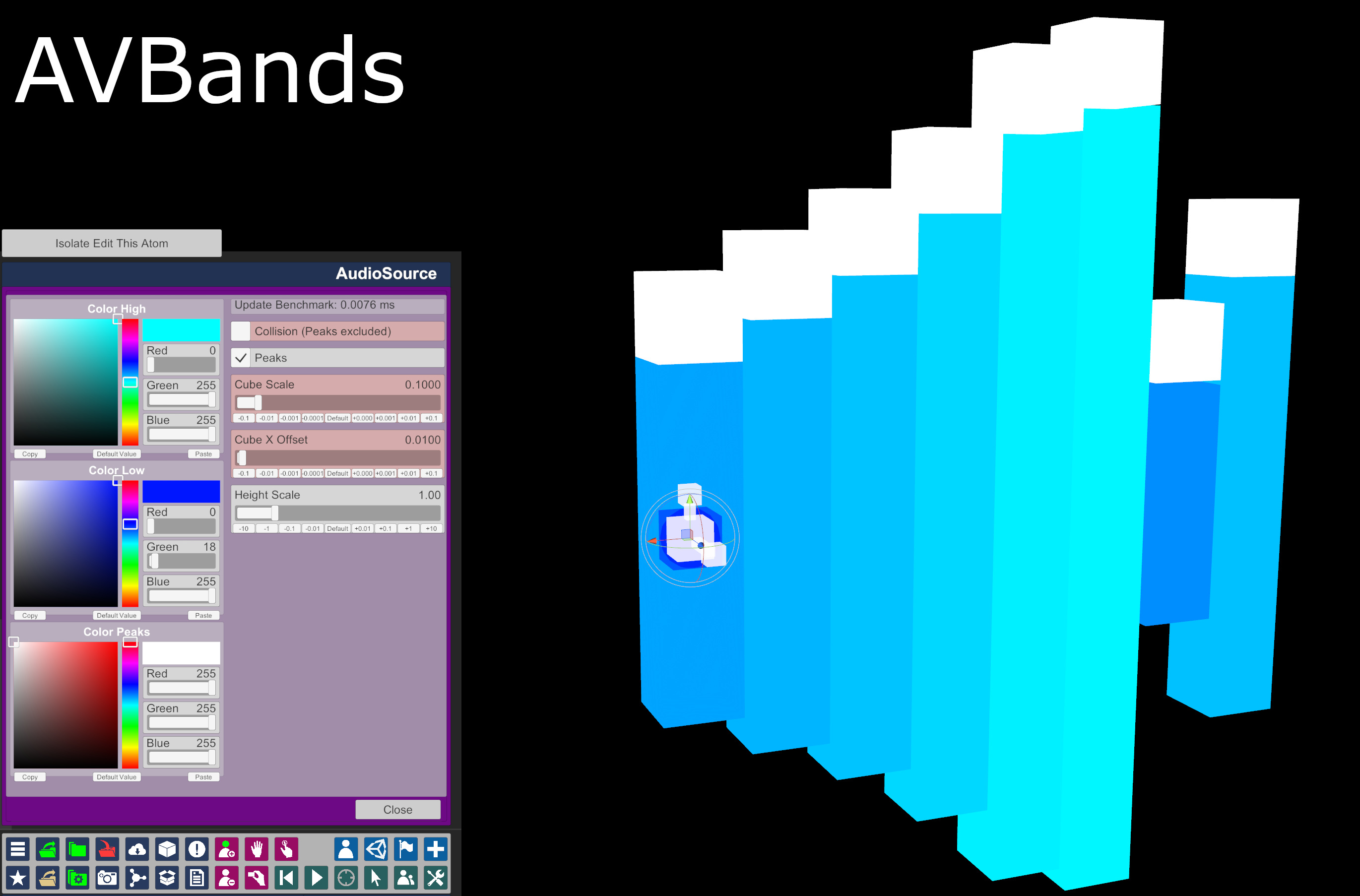

Renders basically 8 cubes that scale with SSFX's frequency bands. Those bands are a simplified representation of the audio currently playing. Their values are:

- band 0: 2 samples 0-1 average - bass

- band 1: 2 samples 2-3 average

- band 2: 2 samples 4-5 average

- band 3: 4 samples 6-9 average

- band 4: 6 samples 10-15 average

- band 5: 16 samples 16-31 average

- band 6: 32 samples 32-63 average

- band 7: 64 samples 64-127 average - high
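The band layout above can be written down as a small Python sketch (the plugin itself is C#). The ranges are taken from the list, the function name `compute_bands` is made up for this example.

```python
# (first sample, last sample) for band 0 to band 7, both inclusive
BAND_RANGES = [
    (0, 1), (2, 3), (4, 5), (6, 9),
    (10, 15), (16, 31), (32, 63), (64, 127),
]


def compute_bands(samples):
    """Average the 128 sample values into 8 frequency bands."""
    if len(samples) < 128:
        raise ValueError("expected at least 128 sample values")
    bands = []
    for first, last in BAND_RANGES:
        chunk = samples[first:last + 1]
        bands.append(sum(chunk) / len(chunk))
    return bands
```

The bands get wider towards the high frequencies, so the bass keeps its detail while the many high samples are merged into a few values.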

This is a dummy visualization. It is intended for developers. It does not render anything. Instead it shows the AVD (Audio Visualization Data) sent from SSFX in the Plugin User Interface.

Spawns 128 primitive cubes from a given frequency and moves them with each update.

Very experimental visualization. Renders one 128x128 mesh similar to a terrain or water surface. Would not recommend to use it yet due to slower performance, but I can't stop you. This badly needs a better shader.

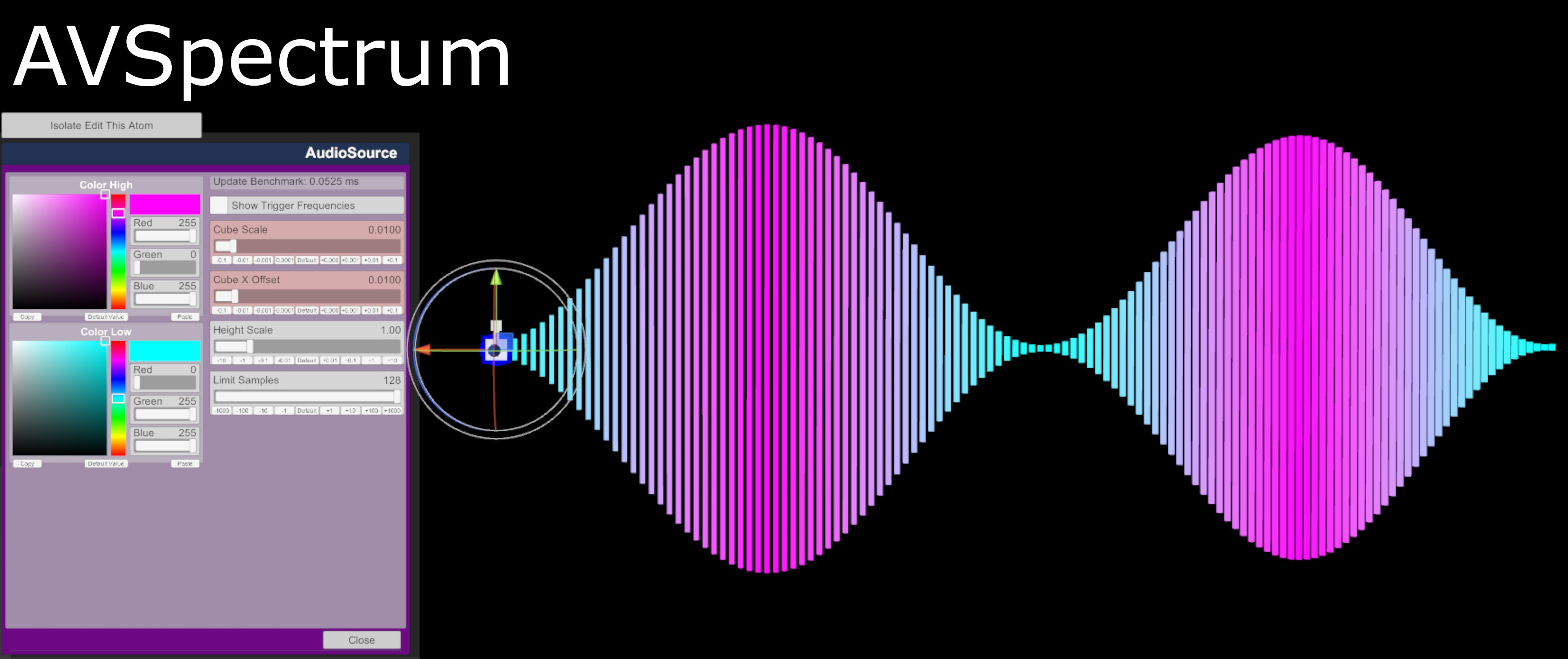

Simply renders all 128 sample values as scaled primitive cubes with some color slapped on them.

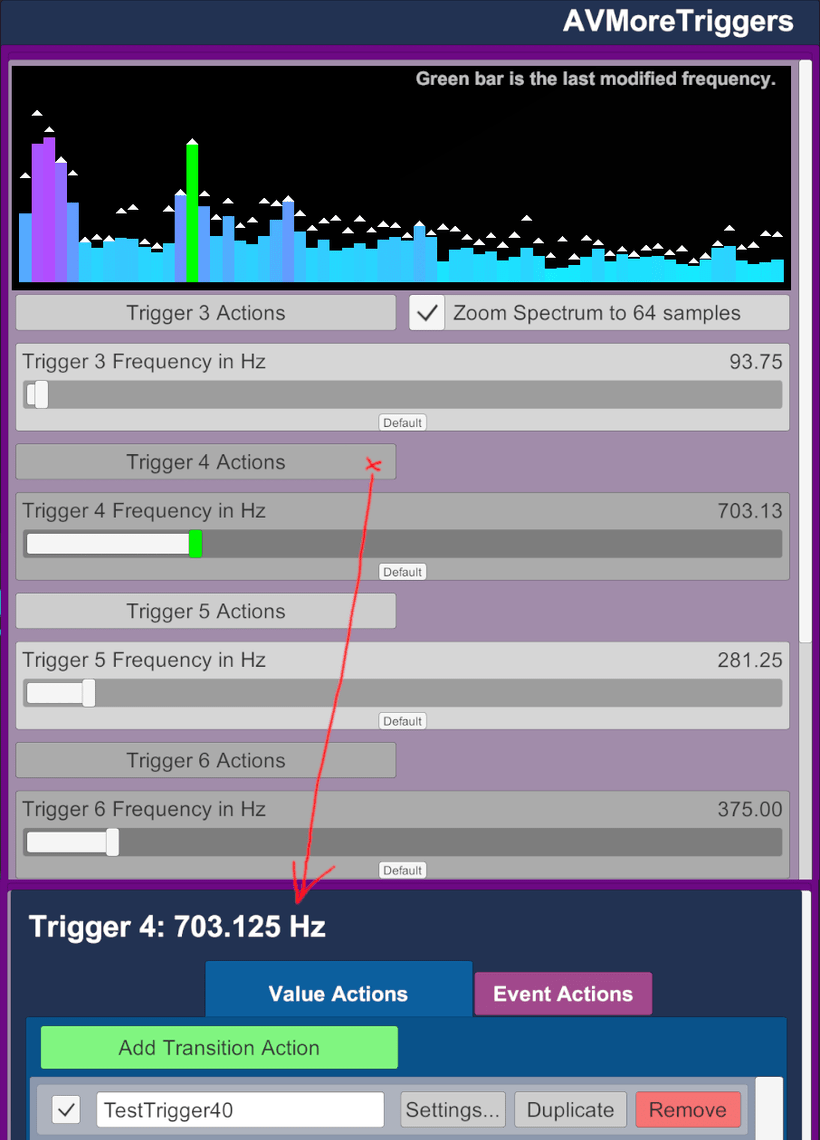

If 2 triggers from the SSFX Master aren't enough this one adds 8 more. It does not render anything in 3D space. (added in version 2.02 / Sally.SallySoundFX.4.var)

Everything below this line was for Version 1. Most info is still relevant tho.

VAM can do only basic audio visualizations based on the volume. (As far as I know)

Therefore SallySoundFX is more accurate. A 'SallySoundFX Demo'-scene is included:

The dancefloor is an experimental first visualization based on (not yet accessible) frequency bands. To keep the plugin lightweight I've only used built-in content. The dancefloor is not a resource. It is created dynamically at runtime. Its size, position and color effect can be changed. For the future I want to provide various prebuilt visualizations and templates (once I've improved some internal signal processing).

Control the light intensity with every beat:

- Move the Trigger 1 frequency slider to whatever frequency you want to 'react' to. For typical music the easiest is to start with a low 100 Hz Bass.

- Trigger 1 Actions

- Add Transition Action

- Settings ...

- Receiver Atom: <your light>

- Receiver: Light

- Receiver Target: Intensity

- (Start) intensity: 0

- (End) intensity: 1 or whatever you want to have as maximum

- OK

- [optional] Enter a descriptive name for this Action: "set light intensity by beat"

- Done
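What the steps above set up can be pictured like this: the trigger sends a value between 0.0 and 1.0, and the Transition Action turns it into a light intensity between the start and end value. The Python sketch below assumes a simple linear mapping; the function name is made up and this is not VAM's actual code.

```python
def transition_value(trigger_value, start=0.0, end=1.0):
    """Map a trigger value (0.0 to 1.0) to a target value between start and end."""
    # Clamp, in case the incoming value is slightly out of range.
    t = max(0.0, min(1.0, trigger_value))
    return start + (end - start) * t
```

For example, with a start intensity of 0 and an end intensity of 2, a trigger value of 0.5 would give an intensity of 1.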

Compared to the one I posted in a video earlier it makes the colors look more washed out / worse.

Trying to build a custom Shader for SSFX right now. Need better materials before fiddling more with Visualizations.