I have this same question:

" Is there any link for a tutorial explaining how to do your "training AI" process? it looks very interesting approach. "

(hopefully this is useful and in line with the request... i'm off meds again, so i ramble)

I suppose I could write something. I just kinda wing it each time, which also gives me a more unique result with each go-through.

Facegen Tips

Depending on your intent (just faces, for example), get a bunch of pictures of the face from multiple angles. The more the better. If they are under 768x768, I would bring them into Stable Diffusion and upscale them; once you have a satisfactory result, save that image and use it. I have had a lot of success with this approach. As for the LORA used, I really have to go through and find the closest matching one. I tend to get all of the ones I use off of http://www.civitai.com/ and make sure they are public domain type loras.

The best results are not from a single attempt. To get my favorite faces, I generally do about 5 different rounds of FaceGen, using different reference photos of the same person. Make small adjustments to get as close as possible to the original. It'll never be 100%, but that's where time and effort come into play, as you will need to tweak them in DAZ and VAM. Save these generated FaceGen morphs, and publish to DAZ.

You only need one good texture, so you can just save the morphs for the others if you want, but you could also average all 5 generations into one image in PS or IM.

Once you have a good set of morphs that all have something that you like and resemble your target, you can go copy them to VAM, then use the sliders to adjust each one to 1/x of the amount (x being the number of morphs you created).

Example: If I generated 3 morphs, I'll set all of them to 33% (0.33). The look gets really close, but nothing is ever perfect. Play with the sliders, but try to get them to total 1.0; it doesn't have to be exact. This is math/art, so yeah, there is a bit of end user flair you have to add.
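The 1/x slider math above can be sketched in a few lines of Python. This is just an illustration of the arithmetic, not anything VAM provides: the function names and the morph names are made up, and you would still type the resulting values into the sliders by hand.

```python
def equal_morph_weights(morph_names):
    """Give each of the x morphs a starting slider value of 1/x."""
    if not morph_names:
        raise ValueError("need at least one morph")
    share = 1.0 / len(morph_names)
    # Round to two decimals, since that is about as fine as a slider gets.
    return {name: round(share, 2) for name in morph_names}


def normalize_weights(weights):
    """Rescale hand-tweaked slider values so they total 1.0 again."""
    total = sum(weights.values())
    if total == 0:
        raise ValueError("weights must not all be zero")
    return {name: value / total for name, value in weights.items()}


# Hypothetical morph names, one per FaceGen round.
start = equal_morph_weights(["facegen_round1", "facegen_round2", "facegen_round3"])
print(start)  # each morph starts at 0.33

# After playing with the sliders the total has drifted to 1.25; pull it back.
tweaked = {"facegen_round1": 0.5, "facegen_round2": 0.3, "facegen_round3": 0.45}
print(normalize_weights(tweaked))
```

Treat the normalized numbers as a suggestion only. If the face looks better with the total a bit over or under 1.0, go with what looks right.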

Then go in and do the fine tuning of each element: eyes, cheeks, nose, mouth.

Personally, I think the eye, nose, and mouth relationships and ratios are the most important.

There is no perfect, 100% easy method to do this. It takes effort and time to make something you like, so don't quit early, and save iterations as you go: save, tweak, save, tweak, etc. Create a project work folder in VAM for this, so you can always roll back to something if you get too far off path.

Also, you can use inpainting to enhance or touch up your images too... the whole point is this is a tool, with many accessories, and 100s more to download or add on to StableDiffusion. Put your mind to it, you can pretty much make anything look like anything, from anything, it's mind breaking.

Once you get it the way you like, then use something like @ceq3's Morph Merge and Split to create head, body and gen morphs as single morphs. I do multiple iterations here too.

AI TRAINING TIPS?

The training really can be summed up with all the tutorials that youtube offers, some are shit though, just some jackass repeating what the better tubers explain.

The key is to grab a lot of images and pick the best 15 to 30. Make sure to have multiple different backgrounds and outfits, so that when you train, it learns that the face is the common point and the other stuff is extra.

Create captions for each image, detailed captions, regardless of what the fools on the tutorials say about a generic one. If you have 10 images that all have different outfits and backgrounds, I would describe each image with details about the background and the clothing, but the subject/person would be the keyword. It's the same person through all the images, so something like KeiraK05 would be useful to put in each description as the first keyword. Basically you're telling it that all the images have different backgrounds, clothing, hair styles, facial expressions, etc., but KeiraK05 is in all of these; it's the common factor of the LORA.

KeiraK05 can be any word or phrase, as long as it isn't a common phrase. Don't use Girl or MatureWoman, as those will definitely be somewhere in any checkpoint you use.

So when I generate an image using this lora, and I say:

upper body,realistic,white background,standing on a porch,in a evening gown,at night,snow on eaves,log cabin,

<lora:ma-lanarose-000004:1>,lanarose,

it'll generate the person from the lora, since we named the lora and the keyword, and add the other items. ^^ I even generated 'prints' in the snow... nice...

lanarose,girl,solo,upper body,realistic,cutoff shorts,horse farm,barn door,daytime,

<lora:ma-lanarose-000004:0.8>,

lanarose,girl,solo,upper body,bikini,swampland dock,

<lora:ma-lanarose-000004:0.8>

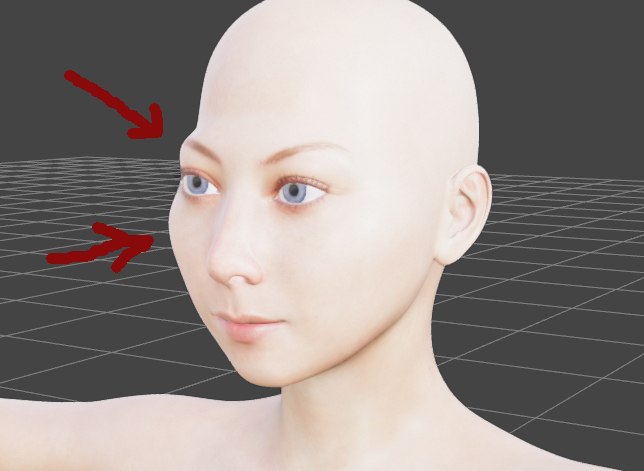

Just from these examples (which aren't great, but show the flexibility of proper training), you can see the resemblance between all 3 persons, with all different backgrounds and outfits, and with no weird distortions or unwanted items. Below is an example (left side) from a lora trained entirely on images from a day at the beach. Right side: same prompt, but with the lora from above.

I had one lora, one of my first, where I only had shots of a person on a beach, with lots of sand and hotels and umbrellas in the backgrounds. I was a noob at the time and didn't know what I did wrong, but everything I generated ended up with sandy backgrounds; even inside shots always had a hotel or a building in the far off distance. Since they were all the same type of photo, the lora lumped all of that in with the common keyword. No matter what I did when I rendered, it always had a beachy background. So, having different backgrounds is very helpful.

| Trained on all beach images | Trained with multiple images, from different locations and clothing |
| --- | --- |
| bedroom,<lora:LucyJMajor05:1>,lucyjmajor person,tanktop, jeans | bedroom,<lora:ma-lanarose-000004:0.8>,lanarose, tanktop, jeans |

The highlighted text in the prompt (lucyjmajor person and lanarose in the prompts above) is the KEYWORD for the lora; the item in the <lora:name>'s is the reference to the lora.
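To make the keyword vs. lora reference split concrete, here is a small Python sketch that assembles a prompt the same way the examples above are written. The build_prompt helper is made up for illustration, and it assumes the <lora:name:weight> tag form used in the prompts in this post; check what your own UI expects.

```python
def build_prompt(keyword, tags, lora_name, weight=1.0):
    """Join the trained keyword, the scene tags, and the lora reference."""
    # The keyword is the word the captions were trained on (e.g. lanarose).
    # The <lora:name:weight> tag is what actually loads the lora file.
    lora_ref = f"<lora:{lora_name}:{weight:g}>"
    return ",".join([keyword, *tags, lora_ref])


prompt = build_prompt(
    keyword="lanarose",
    tags=["girl", "solo", "upper body", "bikini", "swampland dock"],
    lora_name="ma-lanarose-000004",
    weight=0.8,
)
print(prompt)
# lanarose,girl,solo,upper body,bikini,swampland dock,<lora:ma-lanarose-000004:0.8>
```

Both parts need to be in the prompt: the keyword is what the training tied the face to, and the lora tag is what brings the trained weights in.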

You can use the built-in tagger in Kohya-SS to generate captions, but make sure the caption file extension matches the one set in the training settings tab. It'll say something like the default extension is .caption, but if you create imageName.txt files for your imageName.jpg/png files, then make sure to update that setting to .txt. Otherwise the captions will all be ignored, and you won't have a keyword.

Review the captions of each file before you train; sometimes they're really off.
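A quick script can catch the two caption mistakes described above before you waste a training run: a missing caption file (or one with the wrong extension) and a caption that doesn't start with the keyword. This is a rough sketch of my own, not part of Kohya-SS; the check_captions name, the image extension list, and the comma-separated caption format are assumptions.

```python
from pathlib import Path

# Assumed set of image types; extend it if you train on other formats.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def check_captions(image_dir, keyword, caption_ext=".txt"):
    """Return a list of problems found in an image/caption folder."""
    problems = []
    for image in sorted(Path(image_dir).iterdir()):
        if image.suffix.lower() not in IMAGE_EXTENSIONS:
            continue
        # imageName.jpg is expected to sit next to imageName.txt
        caption = image.with_suffix(caption_ext)
        if not caption.exists():
            problems.append(f"{image.name}: no {caption_ext} caption file")
            continue
        text = caption.read_text(encoding="utf-8").strip()
        first_tag = text.split(",")[0].strip()
        if first_tag != keyword:
            problems.append(f"{image.name}: caption does not start with {keyword!r}")
    return problems
```

Call it with your own training folder and keyword, for example check_captions("path/to/your/images", "KeiraK05"), and set caption_ext to whatever extension the training settings tab is set to. An empty list means every image has a caption that leads with the keyword. You still need to read the captions yourself; this only checks the mechanical stuff.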

I generally do 40 to 60 iterations of each image (set in the folder name, but Kohya-SS now has a data preparation tab as well that will help you with that).

Do 10 epochs (10 save points), and watch the samples as they come through to know when to stop training. Again, look for youtube vids on this for now.

The number of steps to train the whole thing might say something like 30,000 steps, and if you are doing 10 epochs, you know that every 3,000 steps a 'save'/checkpoint will be generated in your model folder. There's some math to all this too, also covered in some of the better videos online, but don't get too wrapped up in it; the output is really what you want to watch, the samples. There are some good tips on the sampling too. For example, give the sample prompt blue hair, and once the hair starts to change from blue to the person's hair color, you are probably close to the perfect state. Note the iteration and determine which epoch that would be.
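The step math can be sketched like this. It assumes the commonly described rule of thumb that one epoch is images x iterations per image / batch size; the number Kohya-SS actually reports can differ depending on other settings, so treat this as a ballpark. The function names are made up for illustration.

```python
import math


def training_steps(num_images, repeats, epochs, batch_size=1):
    """Estimate steps per epoch and total steps (rule-of-thumb assumption)."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs


def epoch_for_step(step, steps_per_epoch):
    """Which epoch (save point) a given step falls in, counting from 1."""
    return math.ceil(step / steps_per_epoch)


# 30 images at 50 iterations each, 10 epochs, batch size 1.
steps_per_epoch, total = training_steps(num_images=30, repeats=50, epochs=10)
print(steps_per_epoch, total)  # 1500 15000

# The blue hair started turning natural around step 4200: which save is that?
print(epoch_for_step(4200, steps_per_epoch))  # 3
```

With the numbers from the post, 30,000 total steps over 10 epochs works out the same way: 30,000 / 10 = 3,000 steps per save.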

Those should be some good starters to get some of you going. I could write more, but I've already gone too far with this post, TMI maybe... ADHD definitely. Maybe one day I could do my own how-to guide for this, basically just what I've taken from all the reading and videos I've ingested, pulling out what really works.

Both LORAs used here are ones I trained on my PC (neither is available for distribution, so please don't request them). Depending on your PC specs, training takes about 30 minutes to 1.5 hours, and that is determined by the settings I used in KOHYA-SS (learning rate, batch, threads, etc.). I think I have a pretty beefy rig, so it may take longer for others, and if you don't have NVIDIA, prepare to spend days. JK, I have no idea what others do, but you can use CUDA, transformers and other NVIDIA specific accelerations. I'm not sure how RYZEN works with them either.

Processor 12th Gen Intel(R) Core(TM) i9-12900K 3.20 GHz

Installed RAM 64.0 GB

GPU NVIDIA GeForce RTX 3080 Ti 12 GB

3 x M.2 nvme drives for cache, app, and OS.

8 x SSD for storage and games

1 M.2 NVME just for VAM