Someone has posted a demo of it on Reddit:

Update 3:

This new version works out of the box. You may need to allow Internet access for the plugin and whitelist this domain name: ec2-52-87-238-187.compute-1.amazonaws.com

If you are the only user, the interaction should feel real-time.

Emily can repeat herself; she is still learning.

I will post an update to the GitHub repository once everything is ready.

The server contains these services:

1- Speech to text.

2- Text to Speech.

3- Chatbot.

4- NPC actions.

5- Chatbot choices.

Triggers are not working yet.

Please enjoy while the server is not full.

Update 2:

I have provisioned an AWS server for around $60. It is a bit slow (~5s to respond), so please only use it for testing. For better performance, please run the local servers.

You still need to use your own OpenAI API key, though.

Update 1:

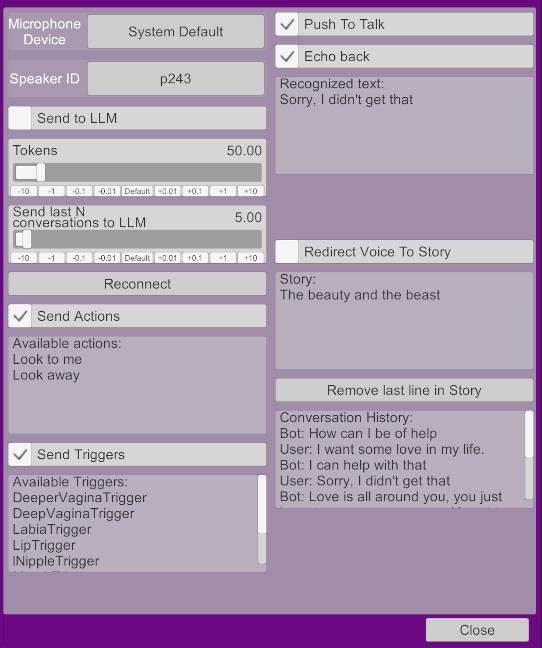

Basically, you just talk and the bot responds. You can ask it to do some actions, and it responds to touch.

The plugin looks for actions in: Scene Animation -> Triggers -> Add Animation Trigger -> Actions...

Please provide action names that can be recognized by the LLM.
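To illustrate why recognizable action names matter, here is a minimal sketch of how a reply from the LLM could be matched against the action names defined in a scene. This is not the plugin's actual code: `pick_action` and the example action names are hypothetical, and the real plugin may match actions differently.

```python
import re
from typing import List, Optional


def pick_action(llm_reply: str, action_names: List[str]) -> Optional[str]:
    """Return the first action name that appears as a whole word or phrase
    in the reply, or None if nothing matches. (Hypothetical helper.)"""
    reply = llm_reply.lower()
    for name in action_names:
        # \b keeps "wave" from matching inside "waved" or "microwave".
        pattern = r"\b" + re.escape(name.lower()) + r"\b"
        if re.search(pattern, reply):
            return name
    return None


# Example action names, assumed for illustration only.
actions = ["wave", "sit down", "stand up"]
print(pick_action("Sure, I will sit down now.", actions))
```

Short, plain-language names such as "sit down" are more likely to show up verbatim in a reply than cryptic ones such as "anim_03".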

I am new to VAM (as a creator).

This is meant as a developer preview plugin to help us get started on using large language models, but you can enjoy it as you wish.

To use this plugin, you need to follow the instructions on the GitHub page to run the needed local servers:

https://github.com/collant/vam-orchestrator (an orchestrator for the VAM Imposter plugin)

Everything is open source (MIT), except for the lipsync plugin that we have to use for the moment. I will be developing a new open source one. Big thanks to the developer of lipsync.

How to use in game:

Push to talk: right-click with the mouse, or use the trigger in VR.

Send to LLM: sends your inputs to OpenAI.

Echo back: will repeat what you have said (recognized text).

Redirect Voice to Story: lets you write your own story using your voice.

This first version is only meant to be used by technical people and content creators.

I will be working on a version that works out of the box, but it needs funding for the servers.

For the moment, we have 3 servers: the node orchestrator, Text To Speech server and Speech to Text server.
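As a rough sketch of how these pieces could fit together for one conversational turn, assuming the orchestrator simply chains speech to text, the chatbot, and text to speech in order: the function names and stub services below are hypothetical stand-ins, not the real servers or their APIs.

```python
from typing import Callable


def run_turn(
    audio: bytes,
    speech_to_text: Callable[[bytes], str],
    chatbot: Callable[[str], str],
    text_to_speech: Callable[[str], bytes],
) -> bytes:
    """Run one turn: recorded audio in, spoken reply out."""
    text = speech_to_text(audio)   # what the user said
    reply = chatbot(text)          # what the bot answers
    return text_to_speech(reply)   # audio to play back in game


# Stub services so the sketch runs without any server (assumptions only).
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_chatbot(text: str) -> str:
    return "You said: " + text


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


print(run_turn(b"hello", fake_stt, fake_chatbot, fake_tts))
```

Passing the services in as functions makes it easy to swap the chatbot for another large language model server later without touching the rest of the turn.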

I will be adding another server for an open source large language model soon.

Some stats:

- Speech recognition + Speech generation => 1s.

- OpenAI response => 2s to 5s.

- Using OpenAI for a one hour session would cost around $1 (with current config).

- The 3 servers are consuming around 6 GB of RAM.
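For a rough feel of what these numbers mean per exchange, here is a small back-of-the-envelope calculation. It assumes the speech stages and the OpenAI call run one after the other, and that cost scales linearly with session length; both are simplifications, not measurements.

```python
# Figures taken from the stats above.
SPEECH_SECONDS = 1.0                         # speech recognition + speech generation
LLM_MIN_SECONDS, LLM_MAX_SECONDS = 2.0, 5.0  # OpenAI response time
COST_PER_HOUR_USD = 1.0                      # approximate, with current config


def round_trip(speech_seconds: float, llm_seconds: float) -> float:
    """Total wait for one exchange, assuming the stages run sequentially."""
    return speech_seconds + llm_seconds


def session_cost(minutes: float) -> float:
    """Estimated OpenAI cost, assuming it scales linearly with time."""
    return COST_PER_HOUR_USD * minutes / 60.0


best = round_trip(SPEECH_SECONDS, LLM_MIN_SECONDS)
worst = round_trip(SPEECH_SECONDS, LLM_MAX_SECONDS)
print(f"Round trip: {best}s to {worst}s")
print(f"30 minute session: about ${session_cost(30):.2f}")
```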

I am still facing some performance issues, especially a frame lag when the microphone recording starts.

Please support me on Patreon: https://www.patreon.com/TwinWin. If you are a developer, please join me in making this happen; if you are a prompt engineer, please share your prompts with us.

Here is my discord server: https://discord.gg/GqN3STn8U8

If it still is not working, the issue might be a microphone configuration that is not supported. Can you try with another microphone?